# Coding a neural network for XOR logic from scratch

In this repository, I put my theoretical knowledge of neural networks into practice by coding a simple neural network from scratch in Python, without using any machine learning library.


### Introduction

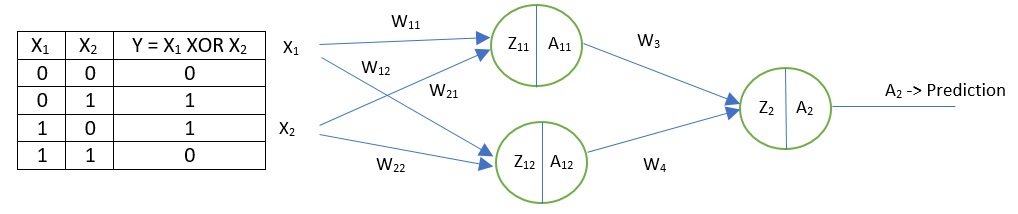

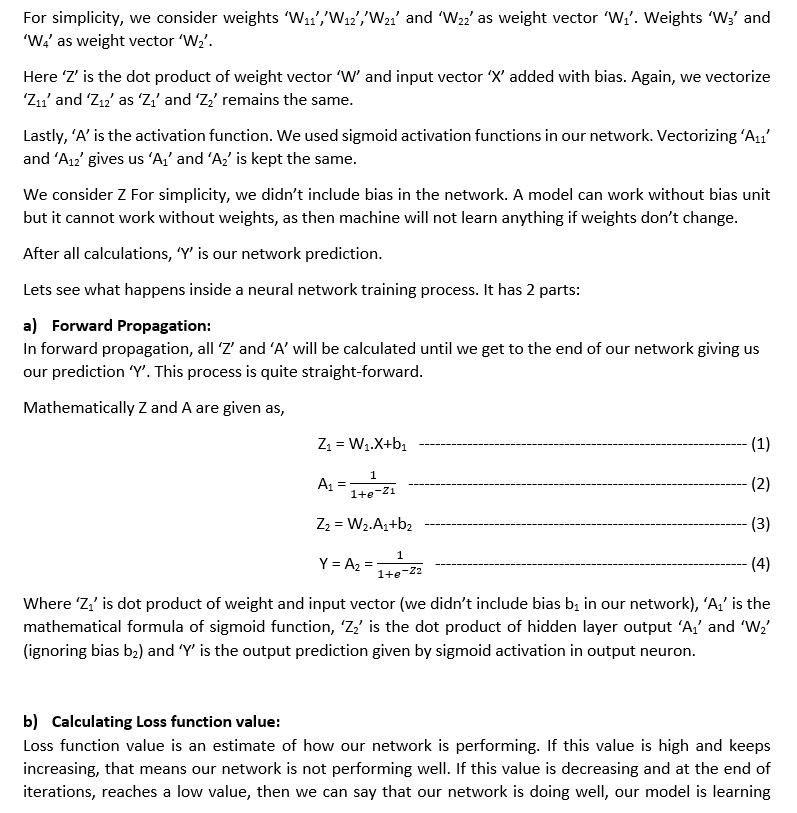

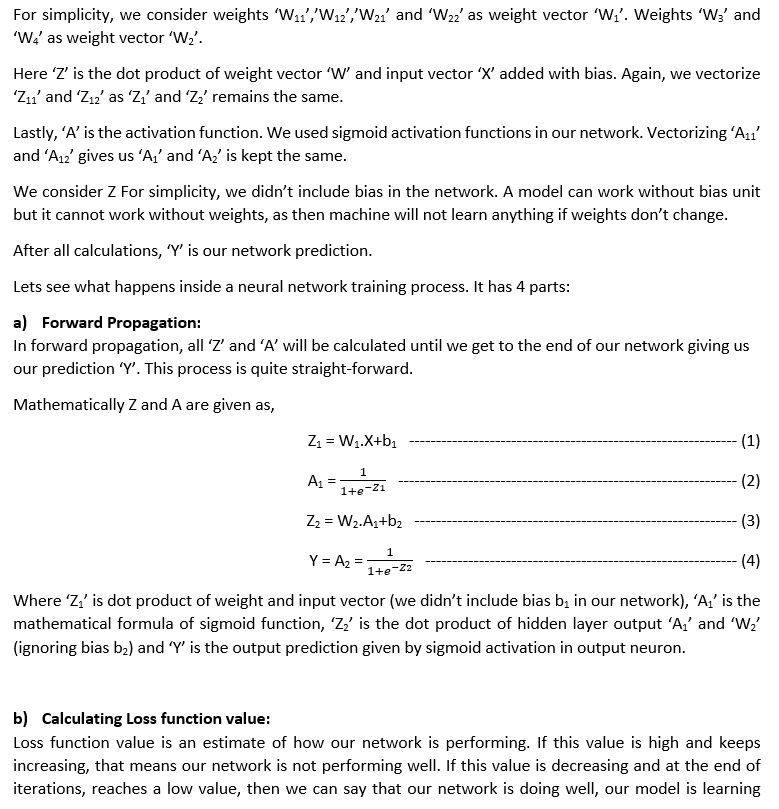

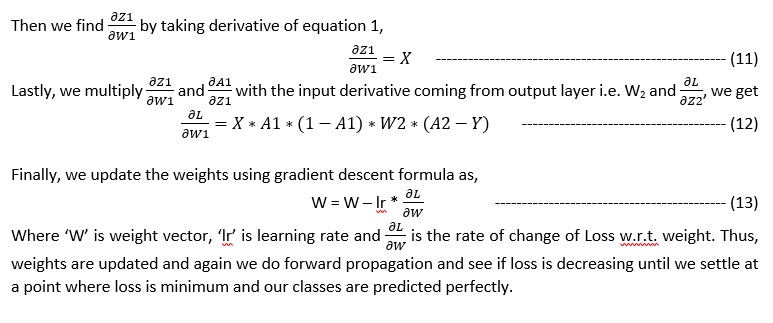

In this project, a neural network with a single hidden layer is used. The sigmoid activation function is applied both in the hidden-layer units and in the output layer. Since the output of XOR logic is binary (0 or 1), the output layer contains only one neuron. The maths behind the neural network is explained below:
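To illustrate why this architecture is enough for XOR, the sketch below shows a network with 2 inputs, 2 sigmoid hidden units and 1 sigmoid output neuron that computes XOR exactly. The weights are hand-picked for illustration (hypothetical, not the values learned in the notebook, whose hidden-layer size may also differ): one hidden unit behaves like OR, the other like NAND, and the output neuron behaves like the AND of the two. The single output lies in (0, 1) and is thresholded at 0.5 to give a binary label. Only the Python standard library is used.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function 1 / (1 + e^(-z))
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# Hypothetical hand-picked weights (NOT the notebook's trained values).
W1 = [[20.0, 20.0], [-20.0, -20.0]]  # W1[j] = weights into hidden unit j (OR-like, NAND-like)
b1 = [-10.0, 30.0]
W2 = [20.0, 20.0]                    # weights from the hidden units to the single output neuron
b2 = -30.0                           # together with W2, makes the output AND-like


def forward(x):
    # Hidden layer: sigmoid of a weighted sum of the two inputs
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(W1, b1)]
    # Output layer: one sigmoid neuron
    return sigmoid(W2[0] * h[0] + W2[1] * h[1] + b2)


def predict(x, threshold=0.5):
    # Turn the sigmoid output into a binary XOR label
    return 1 if forward(x) >= threshold else 0


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(forward(x), 4), predict(x))
```

In the actual project the weights are learned by gradient descent rather than set by hand; the point here is only that one hidden layer with sigmoid units can represent XOR.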


### Explanation of the Maths behind the Neural Network

The following work shows the maths behind a single-hidden-layer neural network:
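In text form, the forward and backward pass for a single-hidden-layer network with one sigmoid output can be summarised as below. This is a sketch under stated assumptions: the squared-error loss and the notation ($W^{(1)}$, $w^{(2)}$, $\delta$, learning rate $\eta$) are chosen here for illustration and may differ from the derivation used in the notebook. It relies on the sigmoid identity $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$.

```math
\begin{aligned}
\text{Forward pass:}\quad & z^{(1)} = W^{(1)} x + b^{(1)}, \qquad h = \sigma\big(z^{(1)}\big), \qquad \sigma(z) = \frac{1}{1 + e^{-z}},\\
& z^{(2)} = w^{(2)\top} h + b^{(2)}, \qquad \hat{y} = \sigma\big(z^{(2)}\big),\\
\text{Loss (assumed):}\quad & L = \tfrac{1}{2}\,(\hat{y} - y)^2,\\
\text{Output delta:}\quad & \delta^{(2)} = \frac{\partial L}{\partial z^{(2)}} = (\hat{y} - y)\,\hat{y}\,(1 - \hat{y}),\\
\text{Hidden deltas:}\quad & \delta^{(1)}_j = \frac{\partial L}{\partial z^{(1)}_j} = \delta^{(2)}\, w^{(2)}_j\, h_j\,(1 - h_j),\\
\text{Gradients:}\quad & \frac{\partial L}{\partial w^{(2)}_j} = \delta^{(2)} h_j, \quad \frac{\partial L}{\partial b^{(2)}} = \delta^{(2)}, \quad \frac{\partial L}{\partial W^{(1)}_{ji}} = \delta^{(1)}_j x_i, \quad \frac{\partial L}{\partial b^{(1)}_j} = \delta^{(1)}_j,\\
\text{Update:}\quad & \theta \leftarrow \theta - \eta\, \frac{\partial L}{\partial \theta} \quad \text{for every parameter } \theta.
\end{aligned}
```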


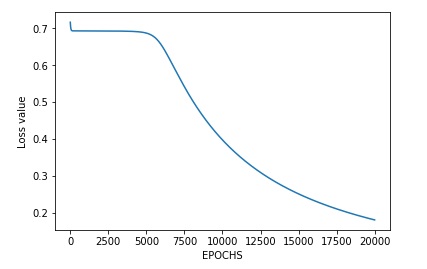

### Results

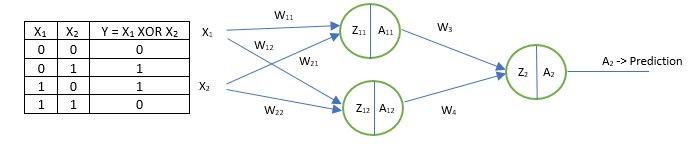

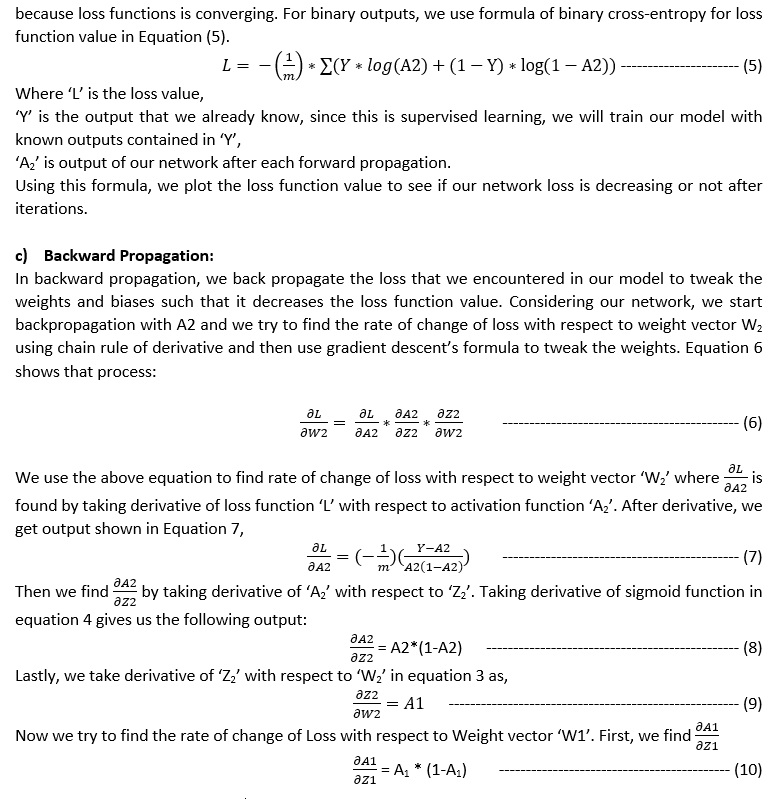

The following image shows the loss function of our network during training; it decreases as training progresses.
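A training loop that records such a loss curve can be sketched in plain Python as follows. This is a minimal sketch, not the notebook's code, and it assumes full-batch gradient descent, the squared-error loss ½(ŷ − y)² averaged over the four XOR examples, and hypothetical choices for the function name (`train_xor`), hidden-layer size (`hidden=4`), learning rate (`lr=1.0`), number of epochs and random initialisation. The gradient formulas follow the standard backpropagation rules for sigmoid units.

```python
import math
import random

# XOR truth table: inputs X and binary targets Y
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [0, 1, 1, 0]


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_xor(hidden=4, lr=1.0, epochs=10000, seed=0):
    """Hypothetical helper: full-batch gradient descent on an assumed squared-error loss."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]  # W1[j][i]: input i -> hidden j
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden j -> single output neuron
    b2 = 0.0
    n = len(X)
    losses = []
    for _ in range(epochs):
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gW2 = [0.0] * hidden
        gb2 = 0.0
        loss = 0.0
        for x, y in zip(X, Y):
            # Forward pass
            h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(hidden)]
            y_hat = sigmoid(sum(W2[j] * h[j] for j in range(hidden)) + b2)
            loss += 0.5 * (y_hat - y) ** 2
            # Backward pass: delta at the output pre-activation
            d2 = (y_hat - y) * y_hat * (1.0 - y_hat)
            gb2 += d2
            for j in range(hidden):
                gW2[j] += d2 * h[j]
                d1 = d2 * W2[j] * h[j] * (1.0 - h[j])  # delta at hidden unit j
                gW1[j][0] += d1 * x[0]
                gW1[j][1] += d1 * x[1]
                gb1[j] += d1
        losses.append(loss / n)  # mean loss before this epoch's update
        # Gradient-descent step with gradients averaged over the batch
        b2 -= lr * gb2 / n
        for j in range(hidden):
            W2[j] -= lr * gW2[j] / n
            b1[j] -= lr * gb1[j] / n
            W1[j][0] -= lr * gW1[j][0] / n
            W1[j][1] -= lr * gW1[j][1] / n
    return (W1, b1, W2, b2), losses
```

Calling `params, losses = train_xor()` and plotting `losses` against the epoch index gives a loss curve for this sketch; its exact shape depends on the seed, learning rate and hidden-layer size.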


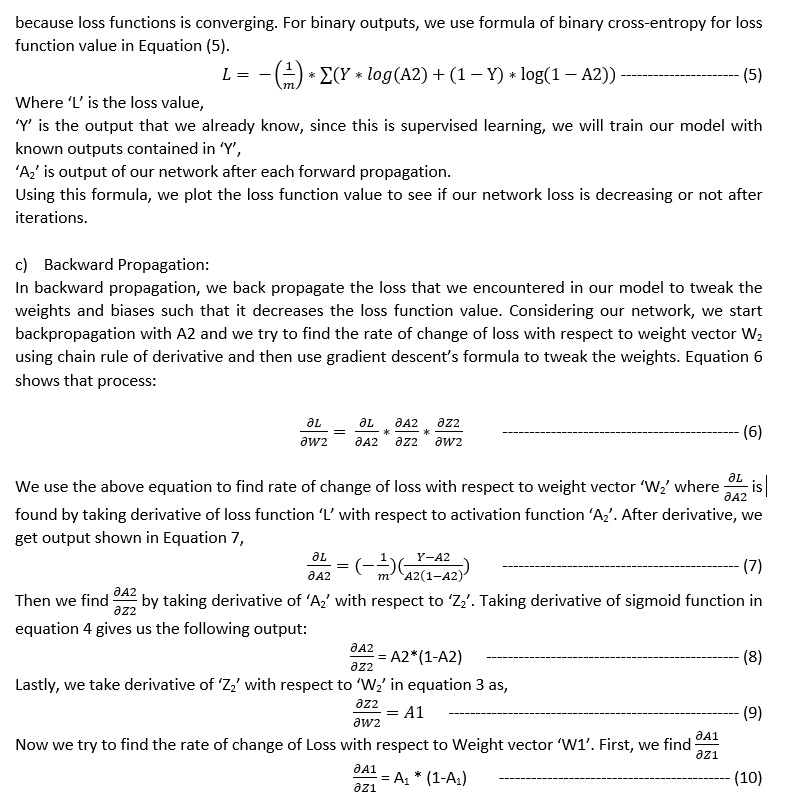

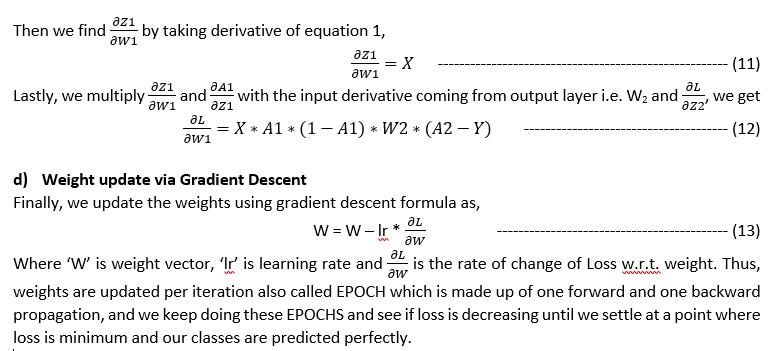

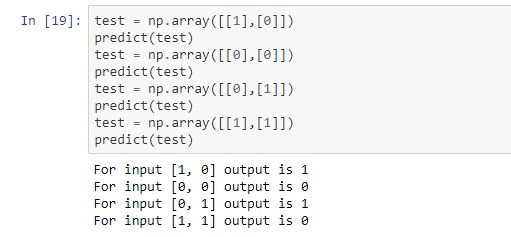

The following are the predictions of the neural network on the test inputs:


Please see the attached Jupyter notebook, which contains the code along with comments to make it easy for readers to understand.
