diff --git a/.gitignore b/.gitignore

index 2c7fb87c9f..5cc40b2da7 100644

--- a/.gitignore

+++ b/.gitignore

@@ -678,4 +678,4 @@ geoip/

test.py

Test/

reddit_tokens.json

-scriptcopy.py

+scriptcopy.py

\ No newline at end of file

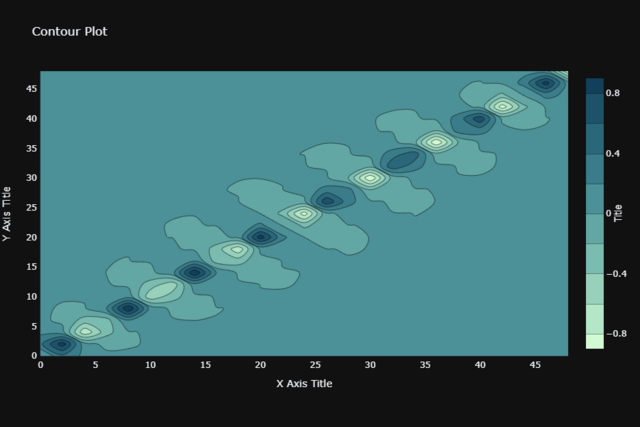

diff --git a/Data-Visualization/Contour Plots/contour_plot.py b/Data-Visualization/Contour Plots/contour_plot.py

new file mode 100644

index 0000000000..013fba2df1

--- /dev/null

+++ b/Data-Visualization/Contour Plots/contour_plot.py

@@ -0,0 +1,48 @@

+import plotly.graph_objects as go

+import numpy as np

+

+# X , Y , Z cordinates

+x_cord = np.arange(0, 50, 2)

+y_cord = np.arange(0, 50, 2)

+z_function = np.sin((x_cord + y_cord)/2)

+

+fig = go.Figure(data=go.Contour(x=x_cord,

+ y=y_cord,

+ z=z_function,

+ colorscale='darkmint',

+ contours=dict(

+ showlabels=False, # show labels on contours

+ labelfont=dict( # label font properties

+ size=12,

+ color='white',

+ )

+ ),

+ colorbar=dict(

+ thickness=25,

+ thicknessmode='pixels',

+ len=1.0,

+ lenmode='fraction',

+ outlinewidth=0,

+ title='Title',

+ titleside='right',

+ titlefont=dict(

+ size=14,

+ family='Arial, sans-serif')

+

+ ),

+

+ )

+ )

+

+fig.update_layout(

+ title='Contour Plot',

+ xaxis_title='X Axis Title',

+ yaxis_title='Y Axis Title',

+ autosize=False,

+ width=900,

+ height=600,

+ margin=dict(l=50, r=50, b=100, t=100, pad=4)

+)

+

+fig.layout.template = 'plotly_dark'

+fig.show()

diff --git a/Data-Visualization/Contour Plots/contourplot.png b/Data-Visualization/Contour Plots/contourplot.png

new file mode 100644

index 0000000000..8da11b39f5

Binary files /dev/null and b/Data-Visualization/Contour Plots/contourplot.png differ

diff --git a/Data-Visualization/FunnelChart/FunnelChart.png b/Data-Visualization/FunnelChart/FunnelChart.png

new file mode 100644

index 0000000000..833a1e1eee

Binary files /dev/null and b/Data-Visualization/FunnelChart/FunnelChart.png differ

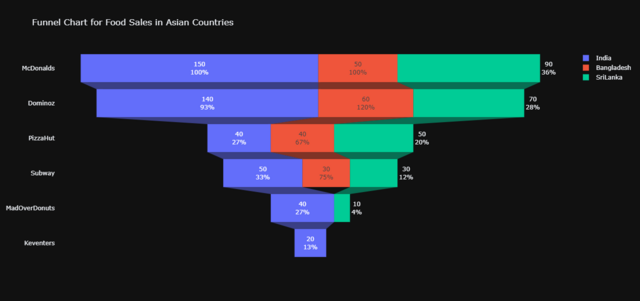

diff --git a/Data-Visualization/FunnelChart/FunnelCharts.py b/Data-Visualization/FunnelChart/FunnelCharts.py

new file mode 100644

index 0000000000..9db9d2a3c8

--- /dev/null

+++ b/Data-Visualization/FunnelChart/FunnelCharts.py

@@ -0,0 +1,33 @@

+from plotly import graph_objects as go

+

+fig = go.Figure()

+

+fig.add_trace(go.Funnel(

+ name = 'India',

+ y = ["McDonalds", "Dominoz", "PizzaHut", "Subway" , "MadOverDonuts" , "Keventers"],

+ x = [150, 140, 40, 50, 40 , 20],

+ textposition = "inside",

+ textinfo = "value+percent initial"))

+

+fig.add_trace(go.Funnel(

+ name = 'Bangladesh',

+ orientation = "h",

+ y = ["McDonalds", "Dominoz", "PizzaHut", "Subway"],

+ x = [50, 60, 40, 30],

+ textposition = "inside",

+ textinfo = "value+percent previous"))

+

+fig.add_trace(go.Funnel(

+ name = 'SriLanka',

+ orientation = "h",

+ y = ["McDonalds", "Dominoz", "PizzaHut", "Subway" ,"MadOverDonuts" ],

+ x = [90, 70, 50, 30, 10],

+ textposition = "outside",

+ textinfo = "value+percent total"))

+

+fig.update_layout(

+ title = "Funnel Chart for Food Sales in Asian Countries",

+ showlegend = True

+)

+fig.layout.template = 'plotly_dark'

+fig.show()

\ No newline at end of file

diff --git a/Data-Visualization/README.md b/Data-Visualization/README.md

index 7d26dfcf1d..d91d15f87e 100644

--- a/Data-Visualization/README.md

+++ b/Data-Visualization/README.md

@@ -35,3 +35,16 @@ pip install plotly

# Author

[Elita Menezes](https://github.com/ELITA04/)

+## 8. Contour Plot

+[](https://postimg.cc/1fqvZnVF)

+

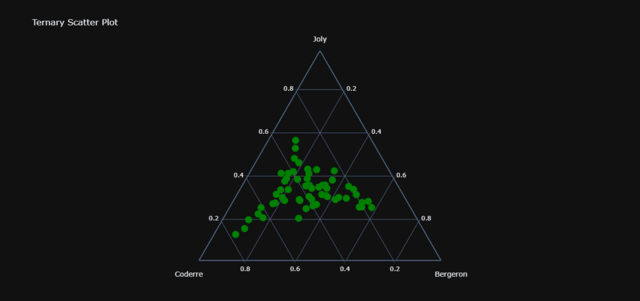

+## 9. Ternary Plot

+[](https://postimg.cc/8F14WZKd)

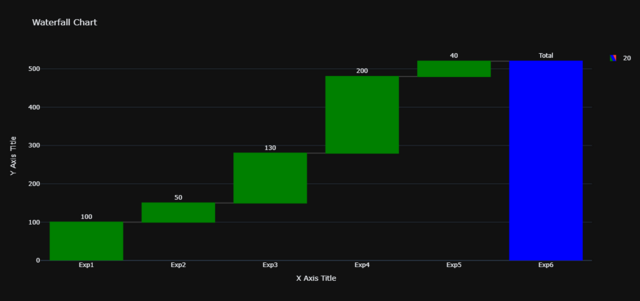

+## 10. Waterfall Chart

+[](https://postimg.cc/TpgJ4zBq)

+## 11. Funnel Chart

+[](https://postimg.cc/YGv1fZ1F)

+

+

+# Author

+[Divya Rao](https://github.com/dsrao711/)

\ No newline at end of file

diff --git a/Data-Visualization/TernaryPlots/TernaryPlot.py b/Data-Visualization/TernaryPlots/TernaryPlot.py

new file mode 100644

index 0000000000..0ece5038f9

--- /dev/null

+++ b/Data-Visualization/TernaryPlots/TernaryPlot.py

@@ -0,0 +1,30 @@

+import plotly.express as px

+import plotly.graph_objects as go

+

+df = px.data.election()

+

+fig = go.Figure(go.Scatterternary({

+ 'mode': 'markers',

+ 'a': df['Joly'],

+ 'b': df['Coderre'],

+ 'c': df['Bergeron'],

+ 'marker': {

+ 'color': 'green',

+ 'size': 14,

+ } ,

+

+}))

+fig.update_layout({

+ 'title': 'Ternary Scatter Plot',

+ 'ternary':

+ {

+ 'sum':1,

+ 'aaxis':{'title': 'Joly', 'min': 0.01, 'linewidth':2, 'ticks':'outside' },

+ 'baxis':{'title': 'Coderre', 'min': 0.01, 'linewidth':2, 'ticks':'outside' },

+ 'caxis':{'title': 'Bergeron', 'min': 0.01, 'linewidth':2, 'ticks':'outside' }

+ },

+ 'showlegend': False

+})

+

+fig.layout.template = 'plotly_dark'

+fig.show()

\ No newline at end of file

diff --git a/Data-Visualization/TernaryPlots/ternaryplot.png b/Data-Visualization/TernaryPlots/ternaryplot.png

new file mode 100644

index 0000000000..4ff6133ae3

Binary files /dev/null and b/Data-Visualization/TernaryPlots/ternaryplot.png differ

diff --git a/Data-Visualization/WaterfallChart/WaterFall.png b/Data-Visualization/WaterfallChart/WaterFall.png

new file mode 100644

index 0000000000..6b3f432803

Binary files /dev/null and b/Data-Visualization/WaterfallChart/WaterFall.png differ

diff --git a/Data-Visualization/WaterfallChart/Waterfall.py b/Data-Visualization/WaterfallChart/Waterfall.py

new file mode 100644

index 0000000000..f94ec9aa18

--- /dev/null

+++ b/Data-Visualization/WaterfallChart/Waterfall.py

@@ -0,0 +1,24 @@

+import plotly.express as px

+import plotly.graph_objects as go

+

+fig = go.Figure(go.Waterfall(

+ name = "20",

+ orientation = "v",

+ measure = ["relative", "relative", "relative", "relative", "relative", "total"],

+ x = ["Exp1", "Exp2", "Exp3", "Exp4", "Exp5", "Exp6"],

+ textposition = "outside",

+ text = ["100", "50", "130", "200", "40", "Total"],

+ y = [100, +50, 130, 200, 40, 0 ],

+ connector = {"line":{"color":"rgb(63, 63, 63)"}},

+ increasing = {"marker":{"color":"green"}},

+ totals = {"marker":{"color":"blue"}}

+))

+

+fig.update_layout(

+ title = "Waterfall Chart",

+ showlegend = True ,

+ xaxis_title='X Axis Title',

+ yaxis_title='Y Axis Title',

+)

+fig.layout.template = 'plotly_dark'

+fig.show()

\ No newline at end of file

diff --git a/Expense Tracker/Readme.md b/Expense Tracker/Readme.md

new file mode 100644

index 0000000000..1ed945f0b3

--- /dev/null

+++ b/Expense Tracker/Readme.md

@@ -0,0 +1,37 @@

+# Expense Tracker

+

+[](https://forthebadge.com)

+

+## Expense Tracker Functionalities : 🚀

+

+- This is used to store the daily expenses.

+- The daily expenses are stored in a format of date, items, and amount spent.

+- This also has a database which shows already stored expenses.

+

+## Expense Tracker Instructions: 👨🏻💻

+

+### Step 1:

+

+ Open Termnial 💻

+

+### Step 2:

+

+ Locate to the directory where python file is located 📂

+

+### Step 3:

+

+ Run the command: python script.py/python3 script.py 🧐

+

+### Step 4:

+

+ Sit back and Relax. Let the Script do the Job. ☕

+

+## Requirements

+

+ - tkinter

+ - tkcalendar

+ - sqlite3

+

+## Author

+

+ Amit Kumar Mishra

\ No newline at end of file

diff --git a/Expense Tracker/script.py b/Expense Tracker/script.py

new file mode 100644

index 0000000000..f2fb2ac74e

--- /dev/null

+++ b/Expense Tracker/script.py

@@ -0,0 +1,111 @@

+from tkinter import ttk

+from tkinter import messagebox

+from tkinter import *

+from tkinter.ttk import Notebook

+from tkcalendar import DateEntry

+import sqlite3

+

+

+def Addexpense():

+ x = Edate.get()

+ y = Item.get()

+ z = Eexpense.get()

+ data = [x,y,z]

+

+ with db:

+ c = db.cursor()

+ c.execute("INSERT INTO expense(Dates, Items, Expense) VALUES(?,?,?)",(x,y,z))

+

+

+

+def show():

+ x = Edate.get()

+ y = Item.get()

+ z = Eexpense.get()

+ data = [x,y,z]

+ connt=sqlite3.connect('./Expense Tracker/expense.db')

+ cursor=connt.cursor()

+ cursor.execute("SELECT * FROM expense")

+ for row in cursor.fetchall():

+ TVExpense.insert('','end',values=row)

+

+def delete():

+ with db:

+ dee = Delete.get()

+ c = db.cursor()

+ c.execute("DELETE FROM expense WHERE Items = ?", (dee,))

+ db.commit()

+ show()

+

+db = sqlite3.connect('./Expense Tracker/expense.db')

+c = db.cursor()

+

+c.execute("""CREATE TABLE IF NOT EXISTS expense(

+ Dates varchar,

+ Items varchar,

+ Expense integer

+)""")

+db.commit()

+

+gui = Tk()

+gui.title('Expense Tracker')

+gui.geometry('700x600')

+

+

+Tab = Notebook(gui)

+F1 = Frame(Tab, width=500, height=500)

+

+Tab.add(F1, text="Expense")

+

+

+Tab.pack(fill=BOTH, expand=1)

+

+ldate = ttk.Label(F1, text="Date", font=(None,18))

+ldate.grid(row=0, column=0, padx=5, pady=5, sticky='w')

+

+Edate = DateEntry(F1, width=19, background = 'blue', foreground='white', font=(None,18))

+Edate.grid(row=0, column=1, padx=5,pady=5, sticky='w')

+

+ltitle = ttk.Label(F1, text="Items",font=(None,18))

+ltitle.grid(row=1, column=0, padx=5, pady=5, sticky='w')

+

+Item = StringVar()

+

+Etitle = ttk.Entry(F1, textvariable=Item,font=(None,18))

+Etitle.grid(row=1, column=1, padx=5, pady=5, sticky='w')

+

+lexpense = ttk.Label(F1, text="Expense",font=(None,18))

+lexpense.grid(row=2, column=0, padx=5, pady=5, sticky='w')

+

+Expense = StringVar()

+

+Eexpense = ttk.Entry(F1, textvariable=Expense,font=(None,18))

+Eexpense.grid(row=2, column=1, padx=5, pady=5, sticky='w')

+

+btn = ttk.Button(F1,text='Add', command=Addexpense)

+btn.grid(row=3, column=1, padx=5, pady=5, sticky='w', ipadx=10, ipady=10)

+

+

+Ldel = ttk.Label(F1, text='Delete',font=(None,18))

+Ldel.grid(row=4, column=0, padx=5, pady=5, sticky='w')

+Delete = StringVar()

+

+dell = ttk.Entry(F1, textvariable=Delete,font=(None,18))

+dell.grid(row=4, column=1, padx=5, pady=5, sticky='w')

+

+btn2 = ttk.Button(F1,text='Delete', command=delete)

+btn2.grid(row=5, column=1, padx=5, pady=5, sticky='w', ipadx=10, ipady=10)

+

+btn1 = ttk.Button(F1,text='Show', command=show)

+btn1.grid(row=3, column=2, padx=5, pady=5, sticky='w', ipadx=10, ipady=10)

+

+TVList = ['Date','Item','Expense']

+TVExpense = ttk.Treeview(F1, column=TVList, show='headings', height=5)

+

+for i in TVList:

+ TVExpense.heading(i, text=i.title())

+

+TVExpense.grid(row=6, column=0, padx=5, pady=5, sticky='w', columnspan=3)

+

+gui.mainloop()

+db.close()

\ No newline at end of file

diff --git a/Fast Algorithm (Corner Detection)/Output.png b/Fast Algorithm (Corner Detection)/Output.png

index 08f2a16d8c..c59b1ae176 100644

Binary files a/Fast Algorithm (Corner Detection)/Output.png and b/Fast Algorithm (Corner Detection)/Output.png differ

diff --git a/Link-Preview/README.md b/Link-Preview/README.md

new file mode 100644

index 0000000000..2800795e1a

--- /dev/null

+++ b/Link-Preview/README.md

@@ -0,0 +1,33 @@

+# Link Preview

+

+A script to provide the user with a preview of the link entered.

+

+- When entered a link, the script will provide with title, description, and link of the website that the URL points to.

+- The script will do so by fetching the Html file for the link and analyzing the data from there.

+- The data will be saved in a `JSON` file named `db.json` for further reference

+- Every entry will have a time limit after which it will be updated (*Data expires after 7 days*)

+

+## Setup instructions

+

+Download the required packages from the following command in you terminal.(Make sure you're in the same project directory)

+

+```

+pip3 install -r requirements.txt

+```

+

+## Running the script:

+After installing all the requirements,run this command in your terminal.

+

+```

+python3 linkPreview.py

+```

+

+## Output

+

+The script will provide you with Title, Description, Image Link and URL.

+

+

+

+## Author(s)

+Hi, I'm [Madhav Jha](https://github.com/jhamadhav) author of this script 🙋♂️

+

diff --git a/Link-Preview/linkPreview.py b/Link-Preview/linkPreview.py

new file mode 100644

index 0000000000..629028e7ff

--- /dev/null

+++ b/Link-Preview/linkPreview.py

@@ -0,0 +1,143 @@

+import requests

+import json

+import os

+import time

+from bs4 import BeautifulSoup

+

+# to scrape title

+

+

+def getTitle(soup):

+ ogTitle = soup.find("meta", property="og:title")

+

+ twitterTitle = soup.find("meta", attrs={"name": "twitter:title"})

+

+ documentTitle = soup.find("title")

+ h1Title = soup.find("h1")

+ h2Title = soup.find("h2")

+ pTitle = soup.find("p")

+

+ res = ogTitle or twitterTitle or documentTitle or h1Title or h2Title or pTitle

+ res = res.get_text() or res.get("content", None)

+

+ if (len(res)> 60):

+ res = res[0:60]

+ if (res == None or len(res.split()) == 0):

+ res = "Not available"

+ return res.strip()

+

+# to scrape page description

+

+

+def getDesc(soup):

+ ogDesc = soup.find("meta", property="og:description")

+

+ twitterDesc = soup.find("meta", attrs={"name": "twitter:description"})

+

+ metaDesc = soup.find("meta", attrs={"name": "description"})

+

+ pDesc = soup.find("p")

+

+ res = ogDesc or twitterDesc or metaDesc or pDesc

+ res = res.get_text() or res.get("content", None)

+ if (len(res)> 60):

+ res = res[0:60]

+ if (res == None or len(res.split()) == 0):

+ res = "Not available"

+ return res.strip()

+

+# to scrape image link

+

+

+def getImage(soup, url):

+ ogImg = soup.find("meta", property="og:image")

+

+ twitterImg = soup.find("meta", attrs={"name": "twitter:image"})

+

+ metaImg = soup.find("link", attrs={"rel": "img_src"})

+

+ img = soup.find("img")

+

+ res = ogImg or twitterImg or metaImg or img

+ res = res.get("content", None) or res.get_text() or res.get("src", None)

+

+ count = 0

+ for i in range(0, len(res)):

+ if (res[i] == "." or res[i] == "/"):

+ count += 1

+ else:

+ break

+ res = res[count::]

+ if ((not res == None) and ((not "https://" in res) or (not "https://" in res))):

+ res = url + "/" + res

+ if (res == None or len(res.split()) == 0):

+ res = "Not available"

+

+ return res

+

+# print dictionary

+

+

+def printData(data):

+ for item in data.items():

+ print(f'{item[0].capitalize()}: {item[1]}')

+

+

+# start

+print("\n======================")

+print("- Link Preview -")

+print("======================\n")

+

+# get url from user

+url = input("Enter URL to preview : ")

+

+# parsing and checking the url

+if (url == ""):

+ url = 'www.girlscript.tech'

+if ((not "http://" in url) or (not "https://" in url)):

+ url = "https://" + url

+

+# printing values

+

+# first check in the DB

+db = {}

+# create file if it doesn't exist

+if not os.path.exists('Link-Preview/db.json'):

+ f = open('Link-Preview/db.json', "w")

+ f.write("{}")

+ f.close()

+

+# read db

+with open('Link-Preview/db.json', 'r+') as file:

+ data = file.read()

+ if (len(data) == 0):

+ data = "{}"

+ file.write(data)

+ db = json.loads(data)

+

+# check if it exists

+if (url in db and db[url]["time"] < round(time.time())): + printData(db[url]) +else: + # if not in db get via request + + # getting the html + r = requests.get(url) + soup = BeautifulSoup(r.text, "html.parser") + + sevenDaysInSec = 7*24*60*60 + # printing data + newData = { + "title": getTitle(soup), + "description": getDesc(soup), + "url": url, + "image": getImage(soup, url), + "time": round(time.time() * 1000) + sevenDaysInSec + } + printData(newData) + # parse file + db[url] = newData + with open('Link-Preview/db.json', 'w') as file: + json.dump(db, file) + +print("\n--END--\n") diff --git a/Link-Preview/requirements.txt b/Link-Preview/requirements.txt new file mode 100644 index 0000000000..cf54fa495a --- /dev/null +++ b/Link-Preview/requirements.txt @@ -0,0 +1,2 @@ +requests==2.25.1 +beautifulsoup4==4.9.3 diff --git a/ORB Algorithm/ORB_Algorithm.py b/ORB Algorithm/ORB_Algorithm.py new file mode 100644 index 0000000000..32a1ea4736 --- /dev/null +++ b/ORB Algorithm/ORB_Algorithm.py @@ -0,0 +1,60 @@ +import cv2 +import numpy as np + +# Load the image +path=input('Enter the path of the image: ') +image = cv2.imread(path) +path2=input('Enter the path for testing image: ') +test_image=cv2.imread(path2) + +#Resizing the image +image=cv2.resize(image,(600,600)) +test_image=cv2.resize(test_image,(600,600)) + +# Convert the image to gray scale +gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY) +test_gray = cv2.cvtColor(test_image, cv2.COLOR_RGB2GRAY) + +#Display the given and test image +image_stack = np.concatenate((image, test_image), axis=1) +cv2.imshow('image VS test_image', image_stack) + +#Implementing the ORB alogorithm +orb = cv2.ORB_create() + +train_keypoints, train_descriptor = orb.detectAndCompute(gray, None) +test_keypoints, test_descriptor = orb.detectAndCompute(test_gray, None) + +keypoints = np.copy(image) + +cv2.drawKeypoints(image, train_keypoints, keypoints, color = (0, 255, 0)) + +# Display image with keypoints +cv2.imshow('keypoints',keypoints) +# Print the number of keypoints detected in the given image +print("Number of Keypoints Detected In The Image: ", len(train_keypoints)) + +# Create a Brute Force Matcher object. +bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck = True) + +# Perform the matching between the ORB descriptors of the training image and the test image +matches = bf.match(train_descriptor, test_descriptor) + +# The matches with shorter distance are the ones we want. +matches = sorted(matches, key = lambda x : x.distance) + +result = cv2.drawMatches(image, train_keypoints, test_image, test_keypoints, matches, test_gray, flags = 2) + +# Display the best matching points +cv2.imshow('result',result) + +#Naming the output image +image_name = path.split(r'/') +image_path = image_name[-1].split('.') +output = r"./ORB Algorithm/"+ image_path[0] + "(featureMatched).jpg" +cv2.imwrite(output,result) + +# Print total number of matching points between the training and query images +print("\nNumber of Matching Keypoints Between The input image and Test Image: ", len(matches)) +cv2.waitKey(0) +cv2.destroyAllWindows() diff --git a/ORB Algorithm/Readme.md b/ORB Algorithm/Readme.md new file mode 100644 index 0000000000..cc225889bd --- /dev/null +++ b/ORB Algorithm/Readme.md @@ -0,0 +1,16 @@ +## ORB Algorithm +In this script, we would use the **ORB(Oriented FAST Rotated Brief)** algorithm of `Open CV` for recognition and matching the features of image. + +ORB is a fusion of the FAST keypoint detector and BRIEF descriptor with some added features to improve the performance. FAST is Features from the Accelerated Segment +Test used to detect features from the provided image. It also uses a pyramid to produce multiscale features. Now it doesn’t compute the orientation and descriptors for +the features, so this is where BRIEF comes in the role. + +## Setup Instructions +- You need to install `OpenCV` and `Python` in your machine. + +## Output +

+

+

+## Author

+[Shubham Gupta](https://github.com/ShubhamGupta577)

diff --git a/Piglatin_Translator/README.md b/Piglatin_Translator/README.md

new file mode 100644

index 0000000000..eb826d7c81

--- /dev/null

+++ b/Piglatin_Translator/README.md

@@ -0,0 +1,20 @@

+# Pig Latin Translator

+A secret language formed from English by transferring the initial consonant or consonant cluster of each word to the end of the word and adding a vocalic syllable (usually /eɪ/): so pig Latin would be igpay atinlay

+

+## Rules

+1. If a word begins with a vowel, just as "yay" to the end. For example, "out" is translated into "outyay".

+2. If it begins with a consonant, then we take all consonants before the first vowel and we put them on the end of the word. For example, "which" is translated into "ichwhay".

+

+## How to run?

+

+ - Run the script ```python piglatin.py```

+ - Enter a Sentence you need to translate to pig latin

+

+ Example sentence: hello fellas my name is Archana

+

+## Output

+

+

+

+## Author

+[Archana Gandhi](https://github.com/archanagandhi)

diff --git a/Piglatin_Translator/piglatin.py b/Piglatin_Translator/piglatin.py

new file mode 100644

index 0000000000..199280755b

--- /dev/null

+++ b/Piglatin_Translator/piglatin.py

@@ -0,0 +1,22 @@

+def main():

+ lst = ['sh', 'gl', 'ch', 'ph', 'tr', 'br', 'fr', 'bl', 'gr', 'st', 'sl', 'cl', 'pl', 'fl']

+ sentence = input('ENTER THE SENTENCE: ')

+ sentence = sentence.split()

+ for k in range(len(sentence)):

+ i = sentence[k]

+ if i[0] in ['a', 'e', 'i', 'o', 'u']:

+ sentence[k] = i+'yay'

+ elif t(i) in lst:

+ sentence[k] = i[2:]+i[:2]+'ay'

+ elif i.isalpha() == False:

+ sentence[k] = i

+ else:

+ sentence[k] = i[1:]+i[0]+'ay'

+ return ' '.join(sentence)

+

+def t(str):

+ return str[0]+str[1]

+

+if __name__ == "__main__":

+ x = main()

+ print(x)

diff --git a/TODO (CLI-VER)/README.md b/TODO (CLI-VER)/README.md

new file mode 100644

index 0000000000..20ceb4565f

--- /dev/null

+++ b/TODO (CLI-VER)/README.md

@@ -0,0 +1,16 @@

+

+

Todo List CLI-VER

+List down your items in a Todolist so that you don't forget

+

+---------------------------------------------------------------------

+

+

+

+## How it works

+- If you don't have todolist.txt file in the current folder it will create one for you

+- You can add items to the list

+- You can delete items from the list

+- You can update items in the list

+- You can display Items as well

+

+#### By [Avishake Maji](https://github.com/Avishake007)

diff --git a/TODO (CLI-VER)/todolist.py b/TODO (CLI-VER)/todolist.py

new file mode 100644

index 0000000000..a808f0c6c7

--- /dev/null

+++ b/TODO (CLI-VER)/todolist.py

@@ -0,0 +1,113 @@

+#Check for the existence of file

+no_of_items=0

+try:

+ f=open("./TODO (CLI-VER)/todolist.txt")

+ p=0

+ for i in f.readlines():#Counting the number of items if the file exists already

+ p+=1

+ no_of_items=p-2

+except:

+ f=open("./TODO (CLI-VER)/todolist.txt",'w')

+ f.write("_________TODO LIST__________\n")

+ f.write(" TIME WORK")

+finally:

+ f.close()

+#Todo list

+print("Press 1: Add Item \nPress 2: Delete Item \nPress 3: Update item \nPress 4: Display Items\nPress 5: Exit")

+n=int(input())

+while n==1 or n==2 or n==3 or n==4:

+ if n==1:

+ todo=[]

+ print("Enter the time in HH:MM format(24 hours format)")

+ time=input()

+ print("Enter your Work")

+ work=input()

+ no_of_items+=1

+ with open('./TODO (CLI-VER)/todolist.txt','a') as f:

+ f.write("\n"+str(no_of_items)+" "+time+" "+work)

+ elif n==2:

+ if(no_of_items<=0): + print("There is no item in the list kindly add some items") + else: + print("____________________________________________________________") + print("Your Current List: ") + todo=[] + with open('./TODO (CLI-VER)/todolist.txt') as f: + for i in f.readlines(): + print(i) + todo.append(i) + print("____________________________________________________________") + print("Enter the position of the item you want to delete : ") + pos=int(input()) + if(pos<=0): + print("Please enter a valid position") + elif (pos>(no_of_items)):

+ print("Please enter the position <= {}".format(no_of_items)) + else: + + todo.pop(pos+1) + no_of_items-=1 + if(no_of_items<=0): + print("Congratulations your todo list is empty!") + + with open('./TODO (CLI-VER)/todolist.txt','w') as f: + for i in range(len(todo)): + if i>=(pos+1):

+ f.write(str(pos)+todo[i][1:])

+ pos+=1

+ else:

+ f.write(todo[i])

+

+ elif n==3:

+ print("____________________________________________________________")

+ print("Your Current List: ")

+ todo=[]

+ with open('./TODO (CLI-VER)/todolist.txt') as f:

+ for i in f.readlines():

+ print(i)

+ todo.append(i)

+ print("____________________________________________________________")

+ print("Enter the position of the items you want to update : ")

+ pos=int(input())

+ if(pos<=0): + print("Please enter a valid position") + elif (pos>(no_of_items)):

+ print("Please enter the position <= {}".format(no_of_items))

+ else:

+ print("What you want to update : ")

+ print("Press 1: Time\nPress 2: Work")

+ choice=int(input())

+ if choice==1:

+ print("Enter your updated time :")

+ time=input()

+ p=todo[pos+1].index(":")

+ y=0

+ with open('./TODO (CLI-VER)/todolist.txt','w') as f:

+ for i in range(len(todo)):

+ if i==pos+1:

+ f.write(str(pos)+" "+time+""+''.join(todo[pos+1][p+3:]))

+ else:

+ f.write(todo[i])

+ elif choice==2:

+ print("Enter your updated work :")

+ work=input()

+ p=todo[pos+1].index(":")

+ y=0

+ with open('./TODO (CLI-VER)/todolist.txt','w') as f:

+ for i in range(len(todo)):

+ if i==pos+1:

+ f.write(str(pos)+" "+''.join(todo[pos+1][p-2:p+3])+" "+work)

+ else:

+ f.write(todo[i])

+ elif n==4:

+ print("Your Current List: ")

+ todo=[]

+ print("____________________________________________________________")

+ with open('./TODO (CLI-VER)/todolist.txt') as f:

+ for i in f.readlines():

+ print(i)

+ todo.append(i)

+ print("____________________________________________________________")

+ print("Press 1: Add Item \nPress 2: Delete the Item\nPress 3: Update item\nPress 4:Display Items\nPress 5:Exit")

+ n=int(input())

+print("Thank you for using our application")

diff --git a/Twitter_Scraper_without_API/README.md b/Twitter_Scraper_without_API/README.md

new file mode 100644

index 0000000000..a0c2597b74

--- /dev/null

+++ b/Twitter_Scraper_without_API/README.md

@@ -0,0 +1,41 @@

+# Tweet hashtag based scraper without Twitter API

+

+- Here, we make use of snscrape to scrape tweets associated with a particular hashtag. Snscrape is a python library that scrapes twitter without the use of API keys.

+

+- We have 2 scripts associated with this project one to fetch tweets with snscrape and store it in the database (we use SQLite3), and the other script displays the tweets from the database.

+

+- Using snscrape, we are storing the hashtag, the tweet content, user id, as well as the URL of the tweets in the database.

+

+## Requirements

+

+Packages associated can be installed as:

+

+```sh

+ $ pip install -r requirements.txt

+```

+

+## Running the script

+

+For running the script which fetches tweets and other info associated with the hashtag and storing in the database:

+```sh

+ $ python fetch_hashtags.py

+```

+

+For running the script to display the tweet info stored in the database:

+```sh

+ $ python display_hashtags.py

+```

+

+## Working

+

+```fetch_hashtags.py``` will work as follows:

+

+

+

+```display_hashtags.py``` will work as follows:

+

+

+

+## Author

+

+[Rohini Rao](https://github.com/RohiniRG)

diff --git a/Twitter_Scraper_without_API/display_hashtags.py b/Twitter_Scraper_without_API/display_hashtags.py

new file mode 100644

index 0000000000..af93c025ff

--- /dev/null

+++ b/Twitter_Scraper_without_API/display_hashtags.py

@@ -0,0 +1,50 @@

+import sqlite3

+import os

+

+

+def sql_connection():

+ """

+ Establishes a connection to the SQL file database

+ :return connection object:

+ """

+ path = os.path.abspath('./Twitter_Scraper_without_API/TwitterDatabase.db')

+ con = sqlite3.connect(path)

+ return con

+

+

+def sql_fetcher(con):

+ """

+ Fetches all the tweets with the given hashtag from our database

+ :param con:

+ :return:

+ """

+ hashtag = input("\nEnter hashtag to search: #")

+ hashtag = '#' + hashtag

+ count = 0

+ cur = con.cursor()

+ cur.execute('SELECT * FROM tweets') # SQL search query

+ rows = cur.fetchall()

+

+ for r in rows:

+ if hashtag in r:

+ count += 1

+ print(f'USERNAME: {r[1]}\nTWEET CONTENT: {r[2]}\nURL: {r[3]}\n')

+

+ if count:

+ print(f'{count} tweets fetched from database')

+ else:

+ print('No tweets available for this hashtag')

+

+

+con = sql_connection()

+

+while 1:

+ sql_fetcher(con)

+

+ ans = input('Press (y) to continue or any other key to exit: ').lower()

+ if ans == 'y':

+ continue

+ else:

+ print('Exiting..')

+ break

+

diff --git a/Twitter_Scraper_without_API/fetch_hashtags.py b/Twitter_Scraper_without_API/fetch_hashtags.py

new file mode 100644

index 0000000000..d74b171039

--- /dev/null

+++ b/Twitter_Scraper_without_API/fetch_hashtags.py

@@ -0,0 +1,66 @@

+import snscrape.modules.twitter as sntweets

+import sqlite3

+

+

+def sql_connection():

+ """

+ Establishes a connection to the SQL file database

+ :return connection object:

+ """

+ con = sqlite3.connect('./Twitter_Scraper_without_API/TwitterDatabase.db')

+ return con

+

+

+def sql_table(con):

+ """

+ Creates a table in the database (if it does not exist already)

+ to store the tweet info

+ :param con:

+ :return:

+ """

+ cur = con.cursor()

+ cur.execute("CREATE TABLE IF NOT EXISTS tweets(HASHTAG text, USERNAME text,"

+ " CONTENT text, URL text)")

+ con.commit()

+

+

+def sql_insert_table(con, entities):

+ """

+ Inserts the desired data into the table to store tweet info

+ :param con:

+ :param entities:

+ :return:

+ """

+ cur = con.cursor()

+ cur.execute('INSERT INTO tweets(HASHTAG, USERNAME, CONTENT, '

+ 'URL) VALUES(?, ?, ?, ?)', entities)

+ con.commit()

+

+

+con = sql_connection()

+sql_table(con)

+

+while 1:

+ tag = input('\n\nEnter a hashtag: #')

+ max_count = int(input('Enter maximum number of tweets to be listed: '))

+

+ count = 0

+ # snscrape uses the given string of hashtag to find the desired amount of

+ # tweets and associated info

+ for i in sntweets.TwitterSearchScraper('#' + tag).get_items():

+ count += 1

+ entities = ('#'+tag, i.username, i.content, i.url)

+ sql_insert_table(con, entities)

+

+ if count == max_count:

+ break

+

+ print('Done!')

+

+ ans = input('Press (y) to continue or any other key to exit: ').lower()

+ if ans == 'y':

+ continue

+ else:

+ print('Exiting..')

+ break

+

diff --git a/Twitter_Scraper_without_API/requirements.txt b/Twitter_Scraper_without_API/requirements.txt

new file mode 100644

index 0000000000..1b4c762254

--- /dev/null

+++ b/Twitter_Scraper_without_API/requirements.txt

@@ -0,0 +1,10 @@

+beautifulsoup4==4.9.3

+certifi==2020.12.5

+chardet==4.0.0

+idna==2.10

+lxml==4.6.2

+PySocks==1.7.1

+requests==2.25.1

+snscrape==0.3.4

+soupsieve==2.2

+urllib3==1.26.4