- * jdk8中:

- * -XX:MetaspaceSize=10m -XX:MaxMetaspaceSize=10m

- *

- * @author IceBlue

- * @create 2020 22:24

- */

-public class OOMTest extends ClassLoader {

- public static void main(String[] args) {

- int j = 0;

- try {

- for (int i = 0; i < 100000; i++) { - OOMTest test = new OOMTest(); - //创建ClassWriter对象,用于生成类的二进制字节码 - ClassWriter classWriter = new ClassWriter(0); - //指明版本号,修饰符,类名,包名,父类,接口 - classWriter.visit(Opcodes.V1_8, Opcodes.ACC_PUBLIC, "Class" + i, null, "java/lang/Object", null); - //返回byte[] - byte[] code = classWriter.toByteArray(); - //类的加载 - test.defineClass("Class" + i, code, 0, code.length);//Class对象 - test = null; - j++; - } - } finally { - System.out.println(j); - } - } -} -``` - -输出结果: - -``` -[GC (Metadata GC Threshold) [PSYoungGen: 10485K->1544K(152576K)] 10485K->1552K(500736K), 0.0011517 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

-[Full GC (Metadata GC Threshold) [PSYoungGen: 1544K->0K(152576K)] [ParOldGen: 8K->658K(236544K)] 1552K->658K(389120K), [Metaspace: 3923K->3320K(1056768K)], 0.0051012 secs] [Times: user=0.00 sys=0.00, real=0.01 secs]

-[GC (Metadata GC Threshold) [PSYoungGen: 5243K->832K(152576K)] 5902K->1490K(389120K), 0.0009536 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

-

--------省略N行-------

-

-[Full GC (Last ditch collection) [PSYoungGen: 0K->0K(2427904K)] [ParOldGen: 824K->824K(5568000K)] 824K->824K(7995904K), [Metaspace: 3655K->3655K(1056768K)], 0.0041177 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

-Heap

- PSYoungGen total 2427904K, used 0K [0x0000000755f80000, 0x00000007ef080000, 0x00000007ffe00000)

- eden space 2426880K, 0% used [0x0000000755f80000,0x0000000755f80000,0x00000007ea180000)

- from space 1024K, 0% used [0x00000007ea180000,0x00000007ea180000,0x00000007ea280000)

- to space 1536K, 0% used [0x00000007eef00000,0x00000007eef00000,0x00000007ef080000)

- ParOldGen total 5568000K, used 824K [0x0000000602200000, 0x0000000755f80000, 0x0000000755f80000)

- object space 5568000K, 0% used [0x0000000602200000,0x00000006022ce328,0x0000000755f80000)

- Metaspace used 3655K, capacity 4508K, committed 9728K, reserved 1056768K

- class space used 394K, capacity 396K, committed 2048K, reserved 1048576K

-

-进程已结束,退出代码0

-

-```

-

-通过不断地动态生成类对象,输出GC日志

-

-根据GC日志我们可以看出当元空间容量耗尽时,会触发FullGC,而每次FullGC之前,至会进行一次MinorGC,而MinorGC只会回收新生代空间;

-

-只有在FullGC时,才会对新生代,老年代,永久代/元空间全部进行垃圾收集

\ No newline at end of file

diff --git "a/JDK/Java8347円274円226円347円250円213円346円234円200円344円275円263円345円256円236円350円267円265円/344円270円200円346円226円207円346円220円236円346円207円202円Java347円232円204円SPI346円234円272円345円210円266円.md" "b/JDK/Java8347円274円226円347円250円213円346円234円200円344円275円263円345円256円236円350円267円265円/344円270円200円346円226円207円346円220円236円346円207円202円Java347円232円204円SPI346円234円272円345円210円266円.md"

deleted file mode 100644

index 1c69b61c1e..0000000000

--- "a/JDK/Java8347円274円226円347円250円213円346円234円200円344円275円263円345円256円236円350円267円265円/344円270円200円346円226円207円346円220円236円346円207円202円Java347円232円204円SPI346円234円272円345円210円266円.md"

+++ /dev/null

@@ -1,63 +0,0 @@

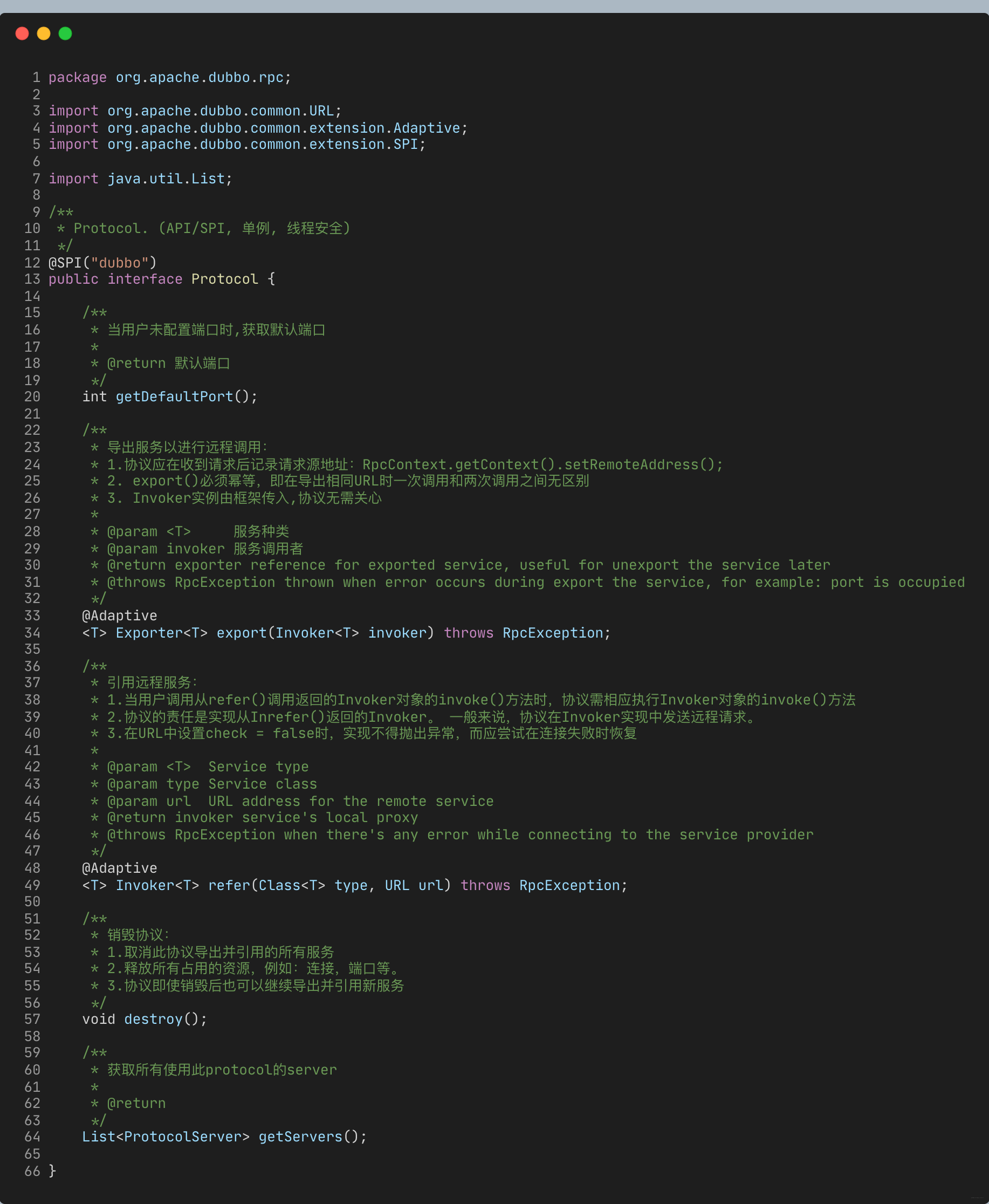

-# 1 简介

-SPI,Service Provider Interface,一种服务发现机制。

-

-有了SPI,即可实现服务接口与服务实现的解耦:

-- 服务提供者(如 springboot starter)提供出 SPI 接口。身为服务提供者,在你无法形成绝对规范强制时,适度"放权" 比较明智,适当让客户端去自定义实现

-- 客户端(普通的 springboot 项目)即可通过本地注册的形式,将实现类注册到服务端,轻松实现可插拔

-

-## 缺点

-- 不能按需加载。虽然 ServiceLoader 做了延迟加载,但是只能通过遍历的方式全部获取。如果其中某些实现类很耗时,而且你也不需要加载它,那么就形成了资源浪费

-- 获取某个实现类的方式不够灵活,只能通过迭代器的形式获取

-

-> Dubbo SPI 实现方式对以上两点进行了业务优化。

-

-# 源码

-

-应用程序通过迭代器接口获取对象实例,这里首先会判断 providers 对象中是否有实例对象:

-- 有实例,那么就返回

-- 没有,执行类的装载步骤,具体类装载实现如下:

-

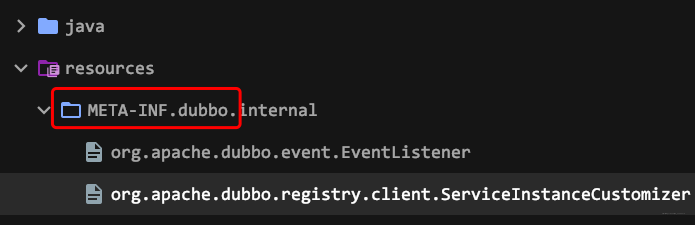

-LazyIterator#hasNextService 读取 META-INF/services 下的配置文件,获得所有能被实例化的类的名称,并完成 SPI 配置文件的解析

-

-

-LazyIterator#nextService 负责实例化 hasNextService() 读到的实现类,并将实例化后的对象存放到 providers 集合中缓存

-

-# 使用

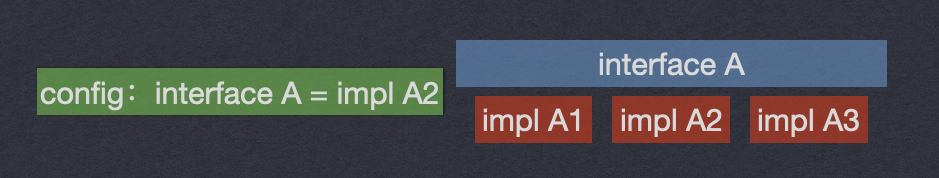

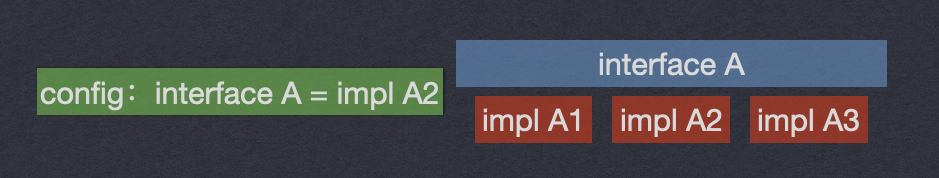

-如某接口有3个实现类,那系统运行时,该接口到底选择哪个实现类呢?

-这时就需要SPI,**根据指定或默认配置,找到对应实现类,加载进来,然后使用该实现类实例**。

-

-如下系统运行时,加载配置,用实现A2实例化一个对象来提供服务:

-

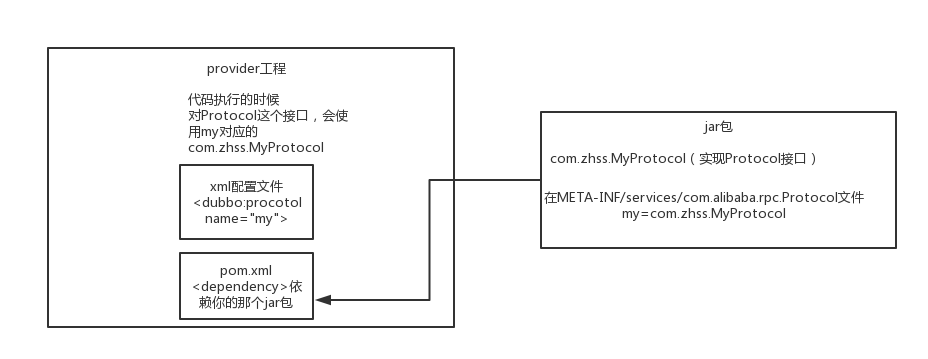

-再如,你要通过jar包给某个接口提供实现,就在自己jar包的`META-INF/services/`目录下放一个接口同名文件,指定接口的实现是自己这个jar包里的某类即可:

-

-别人用这个接口,然后用你的jar包,就会在运行时通过你的jar包指定文件找到这个接口该用哪个实现类。这是JDK内置提供的功能。

-

-> 我就不定义在 META-INF/services 下面行不行?就想定义在别的地方可以吗?

-

-No!JDK 已经规定好配置路径,你若随便定义,类加载器可就不知道去哪里加载了

-

-假设你有个工程P,有个接口A,A在P无实现类,系统运行时怎么给A选实现类呢?

-可以自己搞个jar包,`META-INF/services/`,放上一个文件,文件名即接口名,接口A的实现类=`com.javaedge.service.实现类A2`。

-让P来依赖你的jar包,等系统运行时,P跑起来了,对于接口A,就会扫描依赖的jar包,看看有没有`META-INF/services`文件夹:

-- 有,再看看有无名为接口A的文件:

- - 有,在里面查找指定的接口A的实现是你的jar包里的哪个类即可

-# 适用场景

-## 插件扩展

-比如你开发了一个开源框架,若你想让别人自己写个插件,安排到你的开源框架里中,扩展功能时。

-

-如JDBC。Java定义了一套JDBC的接口,但并未提供具体实现类,而是在不同云厂商提供的数据库实现包。

-> 但项目运行时,要使用JDBC接口的哪些实现类呢?

-

-一般要根据自己使用的数据库驱动jar包,比如我们最常用的MySQL,其`mysql-jdbc-connector.jar` 里面就有:

-

-系统运行时碰到你使用JDBC的接口,就会在底层使用你引入的那个jar中提供的实现类。

-## 案例

-如sharding-jdbc 数据加密模块,本身支持 AES 和 MD5 两种加密方式。但若客户端不想用内置的两种加密,偏偏想用 RSA 算法呢?难道每加一种算法,sharding-jdbc 就要发个版本?

-

-sharding-jdbc 可不会这么蠢,首先提供出 EncryptAlgorithm 加密算法接口,并引入 SPI 机制,做到服务接口与服务实现分离的效果。

-客户端想要使用自定义加密算法,只需在客户端项目 `META-INF/services` 的路径下定义接口的全限定名称文件,并在文件内写上加密实现类的全限定名

-

-

-这就显示了SPI的优点:

-- 客户端(自己的项目)提供了服务端(sharding-jdbc)的接口自定义实现,但是与服务端状态分离,只有在客户端提供了自定义接口实现时才会加载,其它并没有关联;客户端的新增或删除实现类不会影响服务端

-- 如果客户端不想要 RSA 算法,又想要使用内置的 AES 算法,那么可以随时删掉实现类,可扩展性强,插件化架构

\ No newline at end of file

diff --git "a/JDK/345円271円266円345円217円221円347円274円226円347円250円213円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円347円263円273円345円210円227円(15)-345円216円237円345円255円220円351円201円215円345円216円206円344円270円216円351円235円236円351円230円273円345円241円236円345円220円214円346円255円245円346円234円272円345円210円266円.md" "b/JDK/345円271円266円345円217円221円347円274円226円347円250円213円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円347円263円273円345円210円22715円344円271円213円345円216円237円345円255円220円351円201円215円345円216円206円344円270円216円351円235円236円351円230円273円345円241円236円345円220円214円346円255円245円346円234円272円345円210円266円(Atomic-Variables-and-Non-blocking-Synchron.md"

similarity index 68%

rename from "JDK/345円271円266円345円217円221円347円274円226円347円250円213円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円347円263円273円345円210円227円(15)-345円216円237円345円255円220円351円201円215円345円216円206円344円270円216円351円235円236円351円230円273円345円241円236円345円220円214円346円255円245円346円234円272円345円210円266円.md"

rename to "JDK/345円271円266円345円217円221円347円274円226円347円250円213円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円347円263円273円345円210円22715円344円271円213円345円216円237円345円255円220円351円201円215円345円216円206円344円270円216円351円235円236円351円230円273円345円241円236円345円220円214円346円255円245円346円234円272円345円210円266円(Atomic-Variables-and-Non-blocking-Synchron.md"

index 5aece1fb28..13eabf7add 100644

--- "a/JDK/345円271円266円345円217円221円347円274円226円347円250円213円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円347円263円273円345円210円227円(15)-345円216円237円345円255円220円351円201円215円345円216円206円344円270円216円351円235円236円351円230円273円345円241円236円345円220円214円346円255円245円346円234円272円345円210円266円.md"

+++ "b/JDK/345円271円266円345円217円221円347円274円226円347円250円213円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円/Java345円271円266円345円217円221円347円274円226円347円250円213円345円256円236円346円210円230円347円263円273円345円210円22715円344円271円213円345円216円237円345円255円220円351円201円215円345円216円206円344円270円216円351円235円236円351円230円273円345円241円236円345円220円214円346円255円245円346円234円272円345円210円266円(Atomic-Variables-and-Non-blocking-Synchron.md"

@@ -1,30 +1,32 @@

-非阻塞算法,用底层的原子机器指令代替锁,确保数据在并发访问中的一致性。

-非阻塞算法被广泛应用于OS和JVM中实现线程/进程调度机制和GC及锁,并发数据结构中。

+近年并发算法领域大多数研究都侧重非阻塞算法,这种算法用底层的原子机器指令代替锁来确保数据在并发访问中的一致性,非阻塞算法被广泛应用于OS和JVM中实现线程/进程调度机制和GC以及锁,并发数据结构中。

+

+与锁的方案相比,非阻塞算法都要复杂的多,他们在可伸缩性和活跃性上(避免死锁)都有巨大优势。

-与锁相比,非阻塞算法复杂的多,在可伸缩性和活跃性上(避免死锁)有巨大优势。

非阻塞算法,即多个线程竞争相同的数据时不会发生阻塞,因此能更细粒度的层次上进行协调,而且极大减少调度开销。

# 1 锁的劣势

独占,可见性是锁要保证的。

-许多JVM都对非竞争的锁获取和释放做了很多优化,性能很不错。

-但若一些线程被挂起然后稍后恢复运行,当线程恢复后还得等待其他线程执行完他们的时间片,才能被调度,所以挂起和恢复线程存在很大开销。

-其实很多锁的粒度很小,很简单,若锁上存在激烈竞争,那么 调度开销/工作开销 比值就会非常高,降低业务吞吐量。

+许多JVM都对非竞争的锁获取和释放做了很多优化,性能很不错了。

+

+但是如果一些线程被挂起然后稍后恢复运行,当线程恢复后还得等待其他线程执行完他们的时间片,才能被调度,所以挂起和恢复线程存在很大的开销,其实很多锁的力度很小的,很简单,如果锁上存在着激烈的竞争,那么多调度开销/工作开销比值就会非常高。

+

+与锁相比volatile是一种更轻量的同步机制,因为使用volatile不会发生上下文切换或者线程调度操作,但是volatile的指明问题就是虽然保证了可见性,但是原子性无法保证,比如i++的字节码就是N行。

-而与锁相比,volatile是一种更轻量的同步机制,因为使用volatile不会发生上下文切换或线程调度操作,但volatile的指明问题就是虽然保证了可见性,但是原子性无法保证。

+如果一个线程正在等待锁,它不能做任何事情,如果一个线程在持有锁的情况下呗延迟执行了,例如发生了缺页错误,调度延迟,那么就没法执行。如果被阻塞的线程优先级较高,那么就会出现priority invesion的问题,被永久的阻塞下去。

-- 若一个线程正在等待锁,它不能做任何事情

-- 若一个线程在持有锁情况下被延迟执行了,如发生缺页错误,调度延迟,就没法执行

-- 若被阻塞的线程优先级较高,就会出现priority invesion问题,被永久阻塞

# 2 硬件对并发的支持

-独占锁是悲观锁,对细粒度的操作,更高效的应用是乐观锁,这种方法需要借助**冲突监测机制,来判断更新过程中是否存在来自其他线程的干扰,若存在,则失败重试**。

-几乎所有现代CPU都有某种形式的原子读-改-写指令,如compare-and-swap等,JVM就是使用这些指令来实现无锁并发。

+独占锁是悲观所,对于细粒度的操作,更高效的应用是乐观锁,这种方法需要借助**冲突监测机制来判断更新过程中是否存在来自其他线程的干扰,如果存在则失败重试**。

+

+几乎所有的现代CPU都有某种形式的原子读-改-写指令,例如compare-and-swap等,JVM就是使用这些指令来实现无锁并发。

+

## 2.1 比较并交换

+

CAS(Compare and set)乐观的技术。Java实现的一个compare and set如下,这是一个模拟底层的示例:

+

```java

@ThreadSafe

public class SimulatedCAS {

-

@GuardedBy("this") private int value;

public synchronized int get() {

@@ -45,7 +47,9 @@ public class SimulatedCAS {

== compareAndSwap(expectedValue, newValue));

}

}

+

```

+

## 2.2 非阻塞的计数器

```java

public class CasCounter {

@@ -63,12 +67,14 @@ public class CasCounter {

return v + 1;

}

}

+

```

Java中使用AtomicInteger。

竞争激烈一般时,CAS性能远超基于锁的计数器。看起来他的指令更多,但无需上下文切换和线程挂起,JVM内部的代码路径实际很长,所以反而好些。

-但激烈程度较高时,开销还是较大,但会发生这种激烈程度非常高的情况只是理论,实际生产环境很难遇到。况且JIT很聪明,这种操作往往能非常大的优化。

+但激烈程度较高时,它的开销还是较大,但是你会发生这种激烈程度非常高的情况只是理论,实际生产环境很难遇到。

+况且JIT很聪明,这种操作往往能非常大的优化。

为确保正常更新,可能得将CAS操作放到for循环,从语法结构看,使用**CAS**比使用锁更加复杂,得考虑失败情况(锁会挂起线程,直到恢复)。

但基于**CAS**的原子操作,性能基本超过基于锁的计数器,即使只有很小的竞争或不存在竞争!

@@ -76,19 +82,37 @@ Java中使用AtomicInteger。

在轻度到中度争用情况下,非阻塞算法的性能会超越阻塞算法,因为 CAS 的多数时间都在第一次尝试时就成功,而发生争用时的开销也不涉及**线程挂起**和**上下文切换**,只多了几个循环迭代。

没有争用的 CAS 要比没有争用的锁轻量得多(因为没有争用的锁涉及 CAS 加上额外的处理,加锁至少需要一个CAS,在有竞争的情况下,需要操作队列,线程挂起,上下文切换),而争用的 CAS 比争用的锁获取涉及更短的延迟。

-CAS的缺点是,它使用调用者来处理竞争问题,通过重试、回退、放弃,而锁能自动处理竞争问题,例如阻塞。

+CAS的缺点是它使用调用者来处理竞争问题,通过重试、回退、放弃,而锁能自动处理竞争问题,例如阻塞。

-原子变量可看做更好的volatile类型变量。AtomicInteger在JDK8里面做了改动。

-

+原子变量可以看做更好的volatile类型变量。

+AtomicInteger在JDK8里面做了改动。

+```java

+public final int getAndIncrement() {

+ return unsafe.getAndAddInt(this, valueOffset, 1);

+}

+

+```

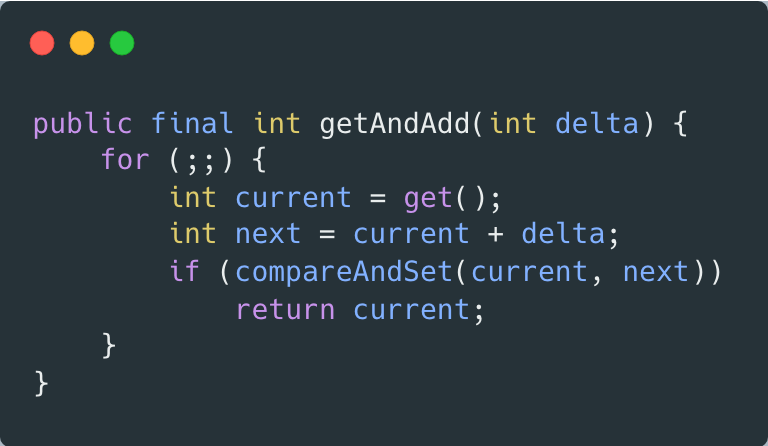

JDK7里面的实现如下:

-

-Unsafe是经过特殊处理的,不能理解成常规的Java代码,1.8在调用getAndAddInt时,若系统底层:

-- 支持fetch-and-add,则执行的就是native方法,使用fetch-and-add

-- 不支持,就按照上面getAndAddInt那样,以Java代码方式执行,使用compare-and-swap

+```java

+public final int getAndAdd(int delta) {

+ for(;;) {

+ intcurrent= get();

+ intnext=current+delta;

+ if(compareAndSet(current,next))

+ returncurrent;

+ }

+ }

+

+```

+Unsafe是经过特殊处理的,不能理解成常规的Java代码,区别在于:

+- 1.8在调用getAndAddInt的时候,如果系统底层支持fetch-and-add,那么它执行的就是native方法,使用的是fetch-and-add

+- 如果不支持,就按照上面的所看到的getAndAddInt方法体那样,以java代码的方式去执行,使用的是compare-and-swap

这也正好跟openjdk8中Unsafe::getAndAddInt上方的注释相吻合:

-以下包含在不支持本机指令的平台上使用的基于 CAS 的 Java 实现

-

+```java

+// The following contain CAS-based Java implementations used on

+// platforms not supporting native instructions

+```

# 3 原子变量类

J.U.C的AtomicXXX。

@@ -140,11 +164,18 @@ public class CasNumberRange {

}

}

}

+

```

+

+

# 4 非阻塞算法

+

Lock-free算法,可以实现栈、队列、优先队列或者散列表。

+

## 4.1 非阻塞的栈

-Trebier算法,1986年提出。

+

+Trebier算法,1986年提出的。

+

```java

public class ConcurrentStack

-## 4 目录结构

+## 4 目录结构(不断优化中)

| 数据结构与算法 | 操作系统 | 网络 | 面向对象 | 数据存储 | Java | 架构设计 | 框架 | 编程规范 | 职业规划 |

| :--------: | :---------: | :---------: | :---------: | :---------: | :---------:| :---------: | :-------: | :-------:| :------:|

@@ -83,6 +93,15 @@

### :memo: 职业规划

+## QQ 技术交流群

+

+为大家提供一个学习交流平台,在这里你可以自由地讨论技术问题。

+

+

+

+## 微信交流群

+

+

### 本人微信

@@ -95,17 +114,4 @@

### 绘图工具

- [draw.io](https://www.draw.io/)

-- keynote

-

-再分享我整理汇总的一些 Java 面试相关资料(亲自验证,严谨科学!别再看网上误导人的垃圾面试题!!!),助你拿到更多 offer!

-

-

-

-[点击获取更多经典必读电子书!](https://mp.weixin.qq.com/s?__biz=MzUzNTY5MzA3MQ==&mid=2247497273&idx=1&sn=b0f1e2e03cd7de3ce5d93cc8793d6d88&chksm=fa832459cdf4ad4fb046c0beb7e87ecea48f338278846679ef65238af45f0a135720e7061002&token=766333302&lang=zh_CN#rd)

-

-2023年最新Java学习路线一条龙:

-

-[](https://www.nowcoder.com/discuss/353159357007339520?sourceSSR=users)

-

-

-再给大家推荐一个学习 前后端软件开发 和准备Java 面试的公众号[【JavaEdge】](https://mp.weixin.qq.com/s?__biz=MzUzNTY5MzA3MQ==&mid=2247498257&idx=1&sn=b09d88691f9bfd715e000b69ef61227e&chksm=fa832871cdf4a1675d4491727399088ca488fa13e0a3cdf2ece3012265e5a3ef273dff540879&token=766333302&lang=zh_CN#rd)(强烈推荐!)

+- keynote

\ No newline at end of file

diff --git "a/Spring/Spring RestTemplate344円270円272円344円275円225円345円277円205円351円241円273円346円220円255円351円205円215円MultiValueMap357円274円237円.md" "b/Spring/Spring RestTemplate344円270円272円344円275円225円345円277円205円351円241円273円346円220円255円351円205円215円MultiValueMap357円274円237円.md"

deleted file mode 100644

index 0281c13f2c..0000000000

--- "a/Spring/Spring RestTemplate344円270円272円344円275円225円345円277円205円351円241円273円346円220円255円351円205円215円MultiValueMap357円274円237円.md"

+++ /dev/null

@@ -1,53 +0,0 @@

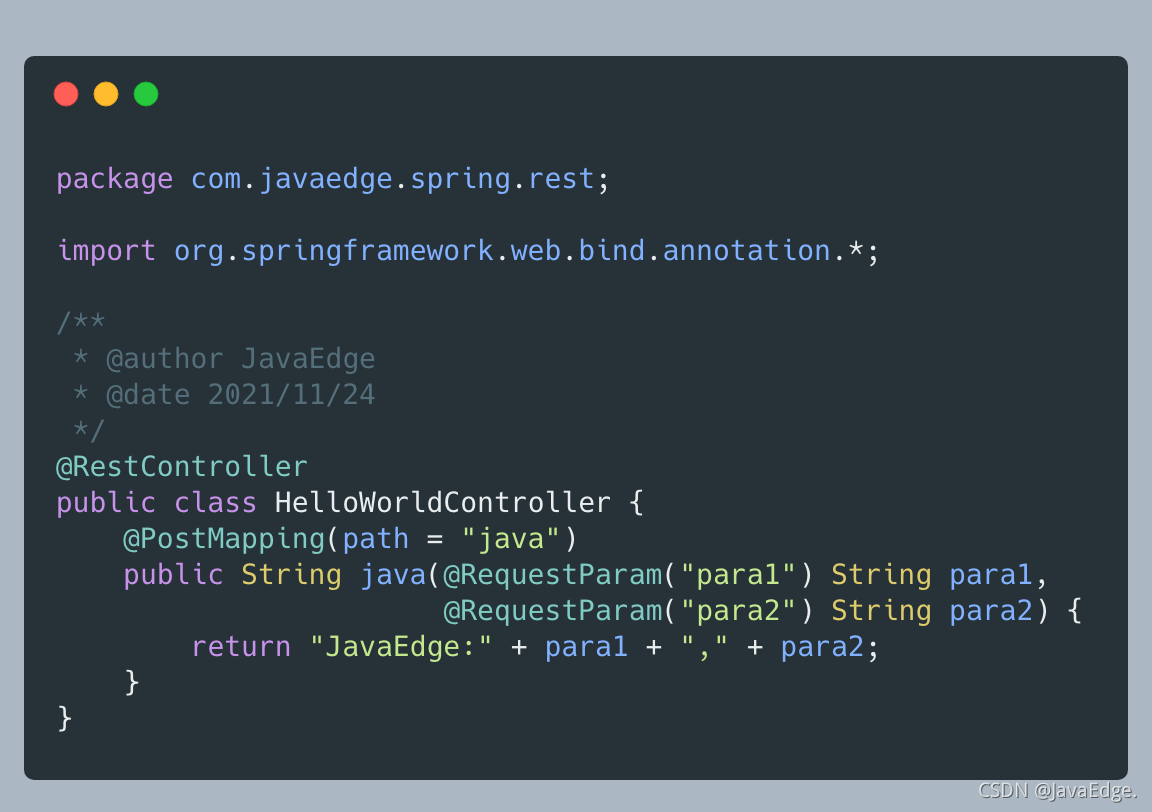

-微服务之间的大多都是使用 HTTP 通信,这自然少不了使用 HttpClient。

-在不适用 Spring 前,一般使用 Apache HttpClient 和 Ok HttpClient 等,而一旦引入 Spring,就有了更好选择 - RestTemplate。

-

-接口:

-

-想接受一个 Form 表单请求,读取表单定义的两个参数 para1 和 para2,然后作为响应返回给客户端。

-

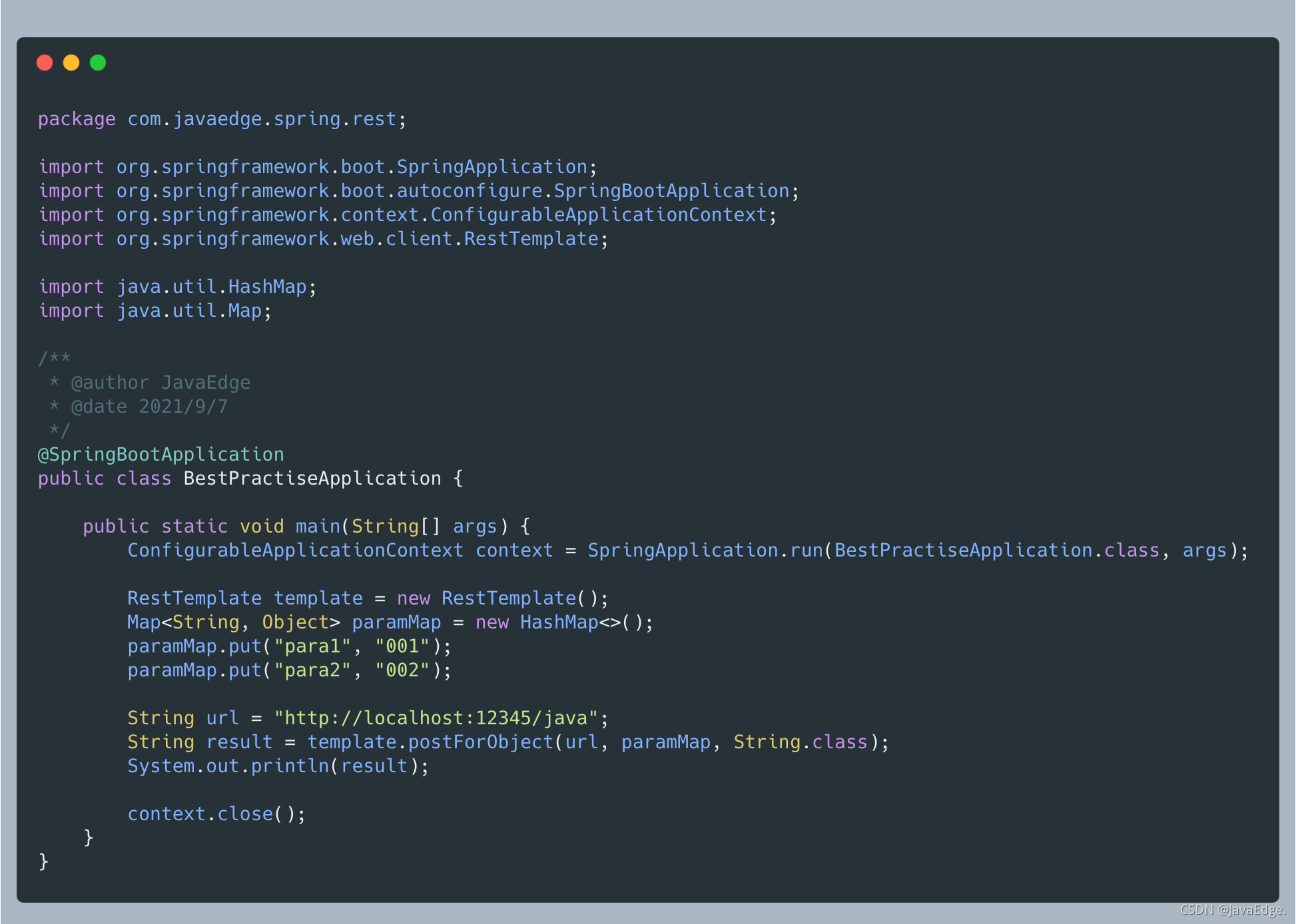

-定义完接口后,使用 RestTemplate 来发送一个这样的表单请求,代码示例如下:

-

-上述代码定义了一个 Map,包含了 2 个表单参数,然后使用 RestTemplate 的 postForObject 提交这个表单。

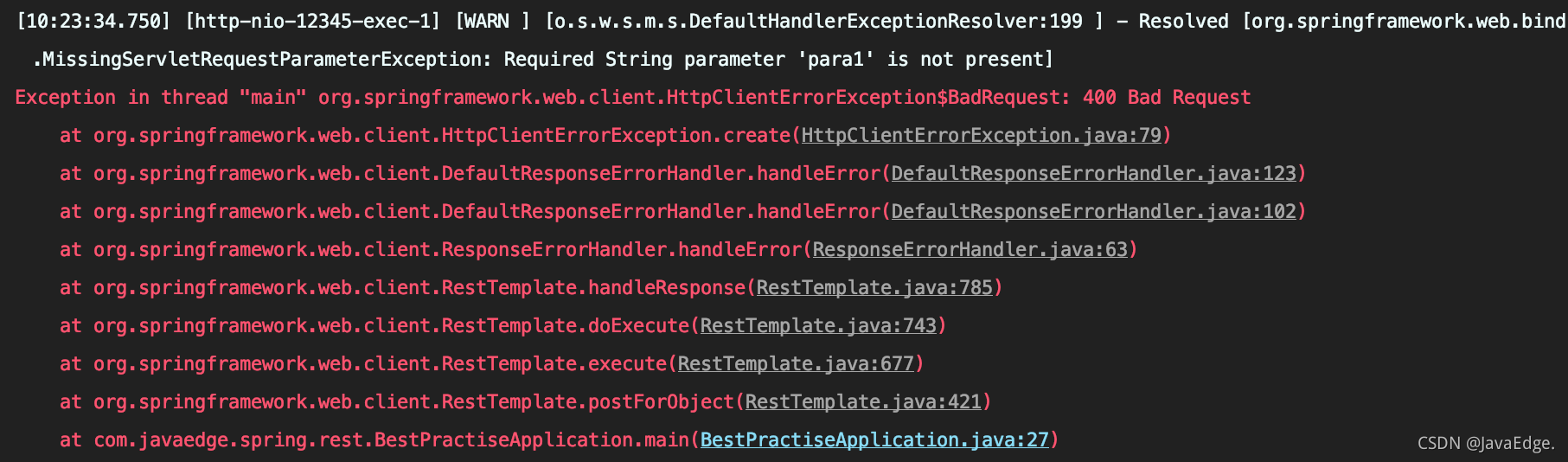

-执行代码提示 400 错误,即请求出错:

-

-就是缺少 para1 表单参数,why?

-# 解析

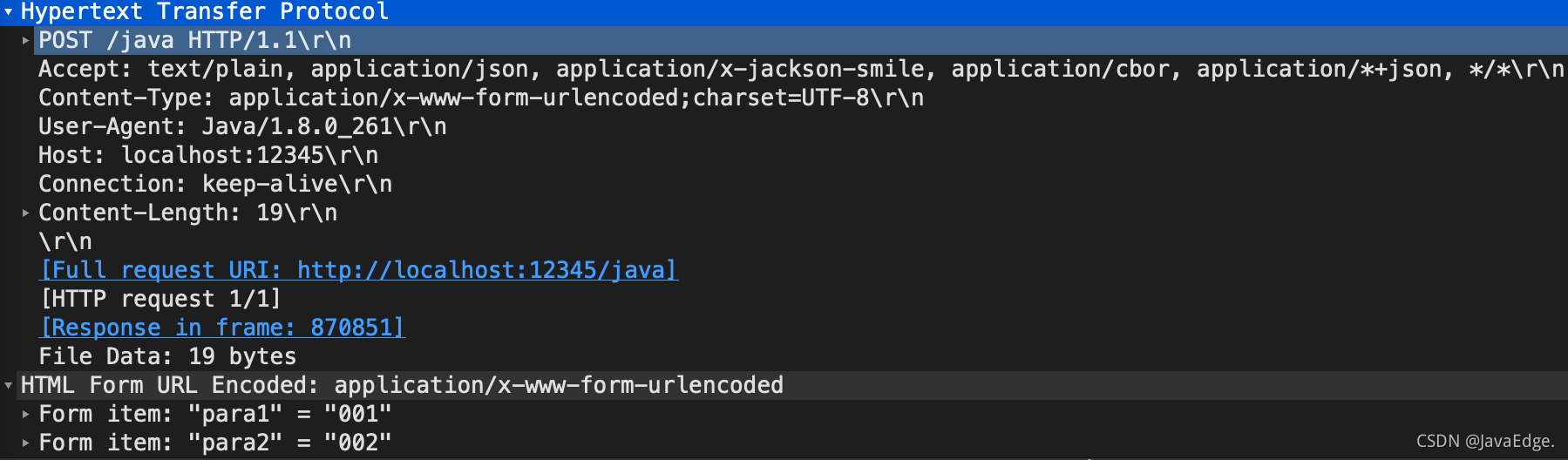

-RestTemplate 提交的表单,最后提交请求啥样?

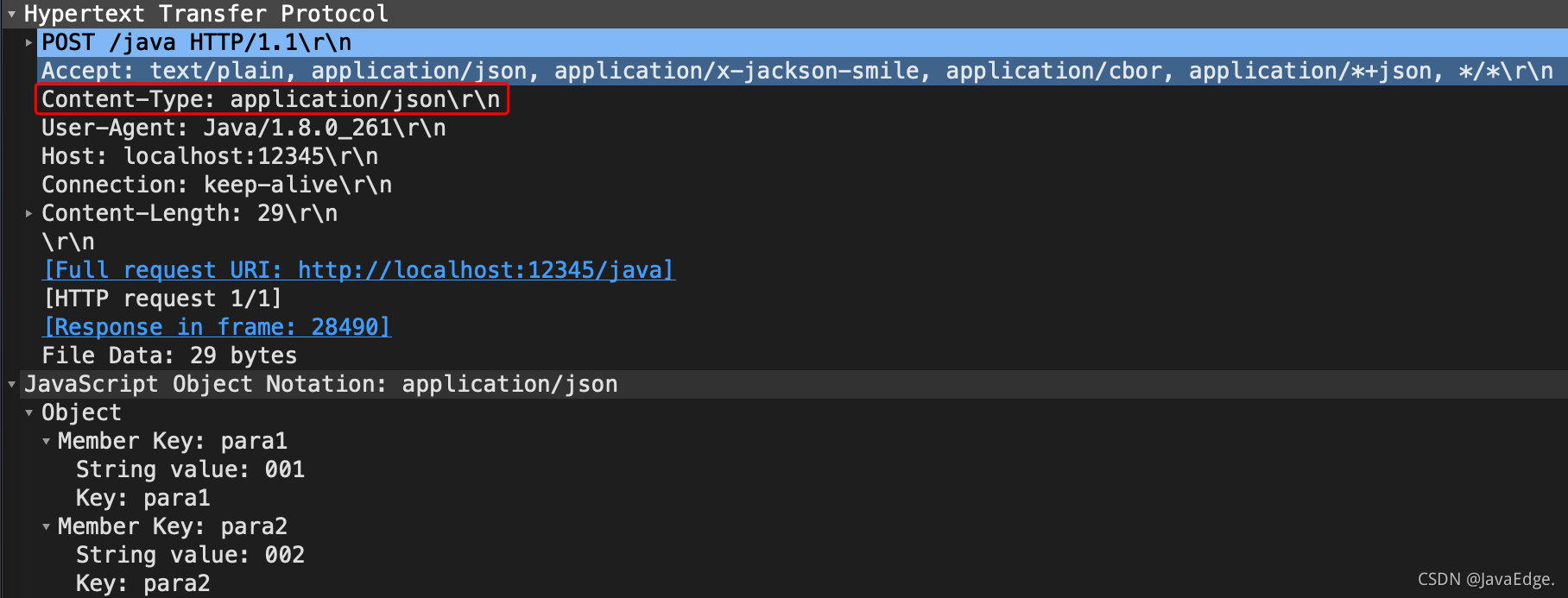

-Wireshark 抓包:

-

-实际上是将定义的表单数据以 JSON 提交过去了,所以我们的接口处理自然取不到任何表单参数。

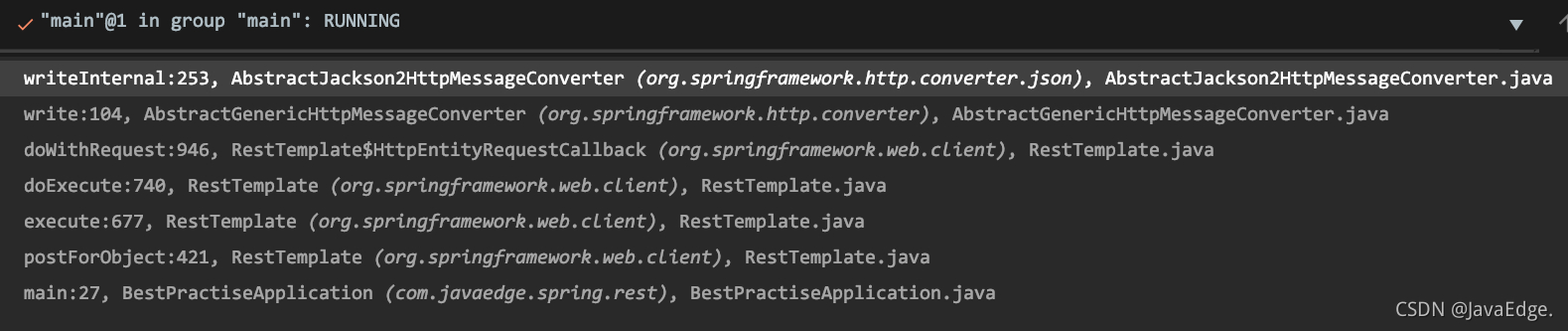

-why?怎么变成 JSON 请求体提交数据呢?注意 RestTemplate 执行调用栈:

-

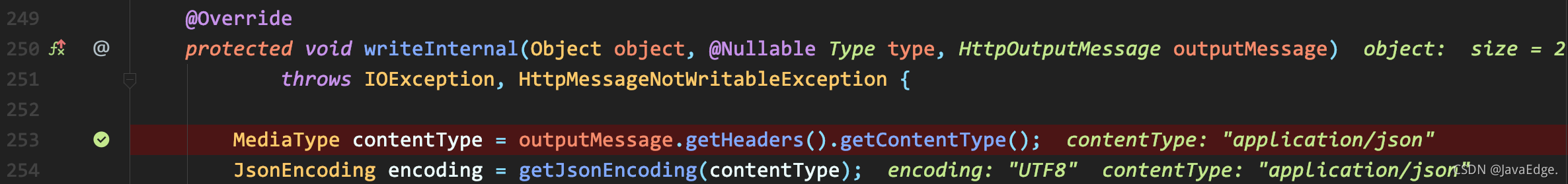

-最终使用的 Jackson 工具序列化了表单

-

-用到 JSON 的关键原因在

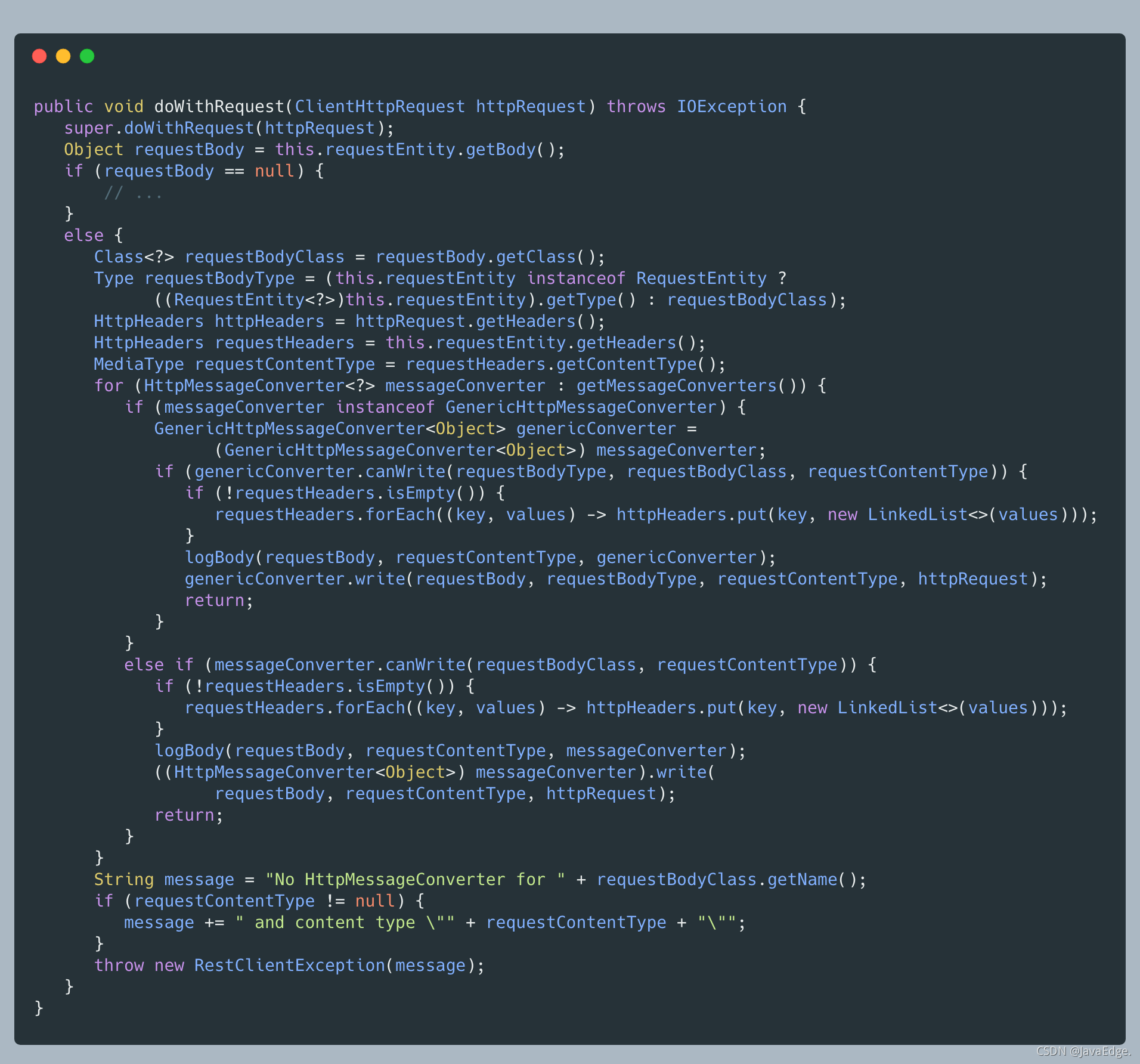

-### RestTemplate.HttpEntityRequestCallback#doWithRequest

-

-根据当前要提交的 Body 内容,遍历当前支持的所有编解码器:

-- 若找到合适编解码器,用之完成 Body 转化

-

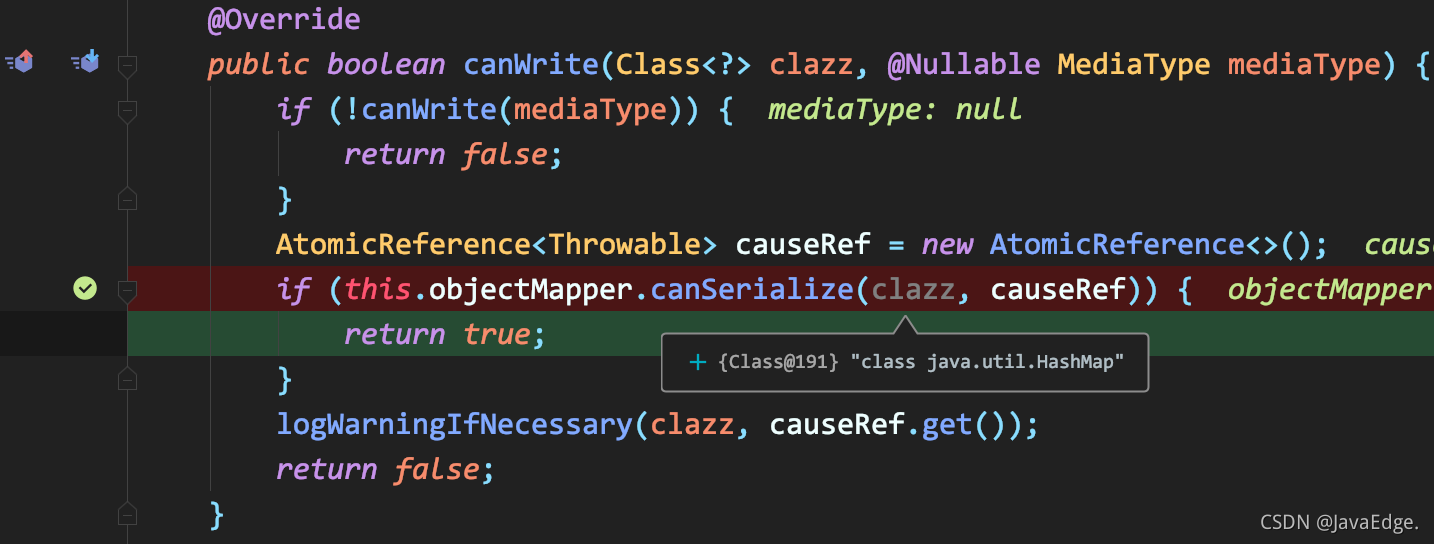

-看下 JSON 的编解码器对是否合适的判断

-### AbstractJackson2HttpMessageConverter#canWrite

-

-可见,当使用的 Body 为 HashMap,是可完成 JSON 序列化的。

-所以后续将这个表单序列化为请求 Body了。

-

-但我还是疑问,为何适应表单处理的编解码器不行?

-那就该看编解码器判断是否支持的实现:

-### FormHttpMessageConverter#canWrite

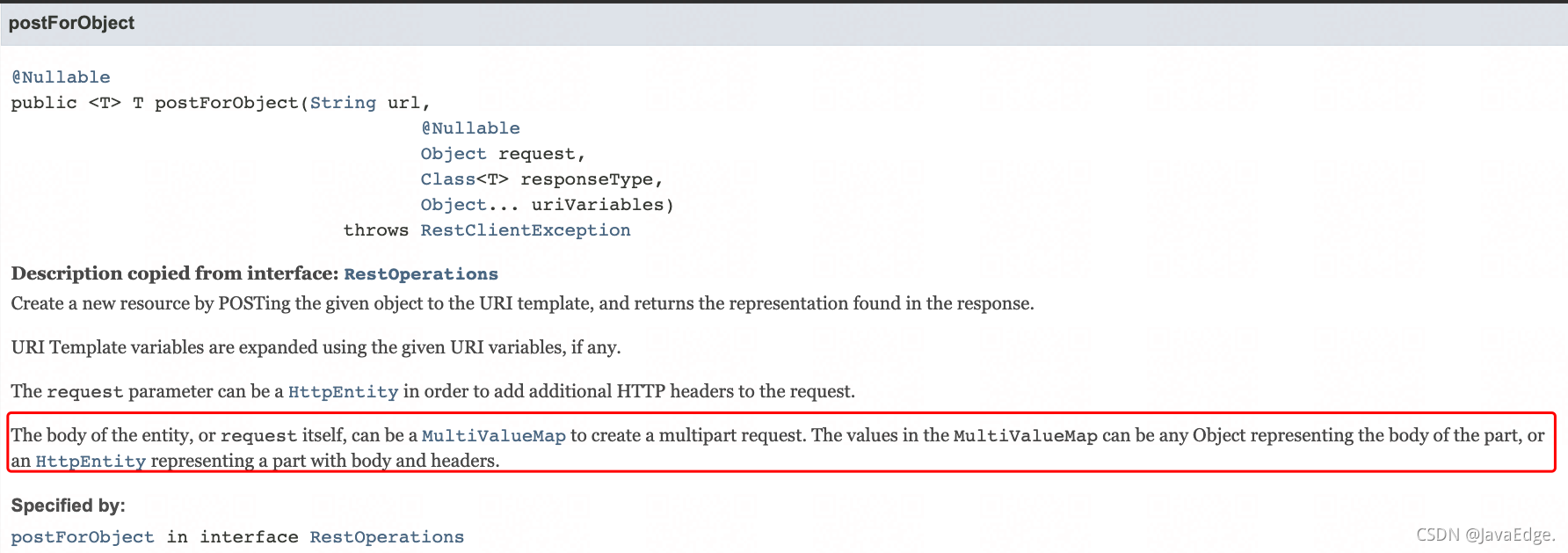

-可见只有当我们发送的 Body 是 MultiValueMap 才能使用表单来提交。

-原来使用 RestTemplate 提交表单必须是 MultiValueMap!

-而我们案例定义的就是普通的 HashMap,最终是按请求 Body 的方式发送出去的。

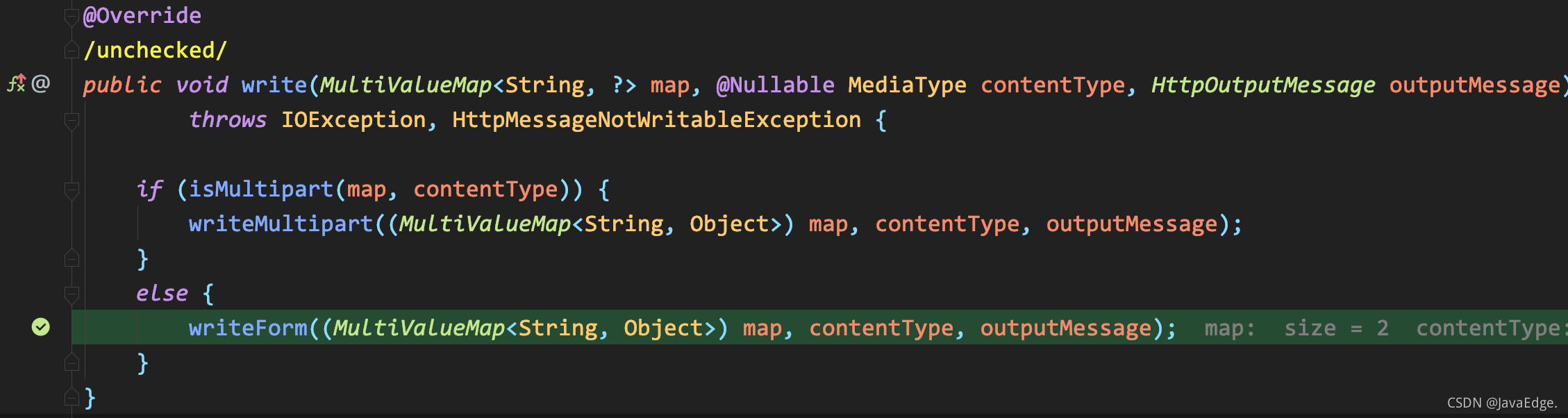

-# 修正

-换成 MultiValueMap 类型存储表单数据即可:

-

-修正后,表单数据最终使用下面的代码进行了编码:

-### FormHttpMessageConverter#write

-

-发送出的数据截图如下:

-

-这就对了!其实官方文档也说明了:

-

-

-> 参考:

-> - https://docs.spring.io/spring-framework/docs/current/javadoc-api/org/springframework/web/client/RestTemplate.html

\ No newline at end of file

diff --git "a/Spring/SpringFramework/Spring Bean347円224円237円345円221円275円345円221円250円346円234円237円347円256円241円347円220円206円.md" "b/Spring/SpringFramework/Spring Bean347円224円237円345円221円275円345円221円250円346円234円237円347円256円241円347円220円206円.md"

index 0f32a53bfe..29d9533994 100644

--- "a/Spring/SpringFramework/Spring Bean347円224円237円345円221円275円345円221円250円346円234円237円347円256円241円347円220円206円.md"

+++ "b/Spring/SpringFramework/Spring Bean347円224円237円345円221円275円345円221円250円346円234円237円347円256円241円347円220円206円.md"

@@ -59,10 +59,9 @@ public interface InitializingBean {

因其实现了InitializingBean接口,其中只有一个方法,且在Bean加载后就执行。该方法可被用来检查是否所有的属性都已设置好。

## 8 BeanPostProcess接口

-Spring将调用它们的postProcessAfterInitialization(后初始化)方法,作用与6一样,只不过6是在Bean初始化前执行,而这是在Bean初始化后执行。

+Spring将调用它们的postProcessAfterInitialization(后初始化)方法,作用与6的一样,只不过6是在Bean初始化前执行,而这个是在Bean初始化后执行。

-

-> 经过以上工作,Bean将一直驻留在应用上下文中给应用使用,直到应用上下文被销毁。

+经过以上工作,Bean将一直驻留在应用上下文中给应用使用,直到应用上下文被销毁。

## 9 DispostbleBean接口

- Spring将调用它的destory方法

@@ -259,28 +258,16 @@ public class GiraffeService implements ApplicationContextAware,

}

```

### BeanPostProcessor

-允许自定义修改新 bean 实例的工厂钩子——如检查标记接口或用代理包装 bean。

-

-- 通过标记接口或类似方式填充bean的后置处理器将实现postProcessBeforeInitialization(java.lang.Object,java.lang.String)

-- 而用代理包装bean的后置处理器通常会实现postProcessAfterInitialization(java.lang.Object,java.lang.String)

-#### Registration

-一个ApplicationContext可在其 Bean 定义中自动检测 BeanPostProcessor Bean,并将这些后置处理器应用于随后创建的任何 Bean。

-普通的BeanFactory允许对后置处理器进行编程注册,将它们应用于通过Bean工厂创建的所有Bean。

-#### Ordering

-在 ApplicationContext 中自动检测的 OrderBeanPostProcessor Bean 将根据 PriorityOrdered 和 Ordered 语义进行排序。

-相比之下,在BeanFactory以编程方式注册的BeanPostProcessor bean将按注册顺序应用

-对于以编程方式注册的后处理器,通过实现 PriorityOrdered 或 Ordered 接口表达的任何排序语义都将被忽略。

-对于 BeanPostProcessor bean,并不考虑 **@Order** 注解。

-

Aware接口是针对某个 **实现这些接口的Bean** 定制初始化的过程,

-Spring还可针对容器中 **所有Bean** 或 **某些Bean** 定制初始化过程,只需提供一个实现BeanPostProcessor接口的实现类。

+Spring同样还可针对容器中 **所有Bean**,或 **某些Bean** 定制初始化过程,只需提供一个实现BeanPostProcessor接口的类即可。

-该接口包含如下方法:

+该接口中包含两个方法:

- postProcessBeforeInitialization

在容器中的Bean初始化之前执行

- postProcessAfterInitialization

在容器中的Bean初始化之后执行

-#### 实例

+

+例子如下:

```java

public class CustomerBeanPostProcessor implements BeanPostProcessor {

@@ -296,10 +283,13 @@ public class CustomerBeanPostProcessor implements BeanPostProcessor {

}

}

```

+

要将BeanPostProcessor的Bean像其他Bean一样定义在配置文件中

+

```xml

-

"},{"parent":"5351911e121f","children":[],"id":"d16b9b53101f","title":"ArrayList线程不安全,线程安全版本的数组容器是Vector

"},{"parent":"5351911e121f","children":[],"id":"5eb59595d8fd","title":"与LinkedList遍历效率对比,性能高很多,ArrayList遍历最大的优势在于内存的连续性,CPU的内部缓存结构会缓存连续的内存片段,可以大幅降低读取内存的性能开销

"}],"id":"5351911e121f","title":"ArrayList"},{"parent":"36d002b102e2","children":[{"parent":"f817a30f5e08","children":[],"id":"e3e17fe90642","title":"数据结构: 双向链表"},{"parent":"f817a30f5e08","children":[],"id":"3846d194c83e","title":"适合插入删除频繁的情况 内部维护了链表的长度

"}],"id":"f817a30f5e08","title":"LinkedList

"}],"id":"36d002b102e2","title":"List"},{"parent":"800ecb4c0776","children":[{"parent":"24f4a3146f29","children":[{"parent":"f4b8a675a029","children":[{"parent":"2353a0a1da34","children":[],"id":"8838e06b887a","title":"数据结构: 数组+链表

"},{"parent":"2353a0a1da34","children":[],"id":"7a82749e87f8","title":"头插法: 新来的值会取代原有的值,原有的值就顺推到链表中去

"},{"parent":"2353a0a1da34","children":[],"id":"c1c3800b1ead","title":"Java7在多线程操作HashMap时可能引起死循环,原因是扩容转移后前后链表顺序倒置,在转移过程中修改了原来链表中节点的引用关系, 可能形成环形链表

"}],"id":"2353a0a1da34","title":"1.7"},{"parent":"f4b8a675a029","children":[{"parent":"cb2e0e4bf56a","children":[],"id":"a743dde50473","title":"数据结构: 数组+链表+红黑树

"},{"parent":"cb2e0e4bf56a","children":[{"parent":"4bb6868f0cc3","children":[],"id":"4753ca9df7aa","title":"根据泊松分布,在负载因子默认为0.75的时候,单个hash槽内元素个数为8的概率小于百万分之一,所以将7作为一个分水岭,等于7的时候不转换,大于等于8的时候才进行转换,小于等于6的时候就化为链表

"}],"id":"4bb6868f0cc3","title":"Hashmap中的链表大小超过八个时会自动转化为红黑树,当删除小于六时重新变为链表

"},{"parent":"cb2e0e4bf56a","children":[],"id":"1c07c2a415b7","title":"尾插法

"},{"parent":"cb2e0e4bf56a","children":[],"id":"94b8ffa2573f","title":"Java8在同样的前提下并不会引起死循环,原因是扩容转移后前后链表顺序不变,保持之前节点的引用关系

"}],"id":"cb2e0e4bf56a","title":"1.8"},{"parent":"f4b8a675a029","children":[{"parent":"6d54a11adce4","children":[],"id":"210d702bbf4a","title":"LoadFactory 默认0.75

"},{"parent":"6d54a11adce4","children":[],"id":"e4055d669e46","title":"

"},{"parent":"6d54a11adce4","children":[{"parent":"af3ec421dc31","children":[],"id":"0c6cfee11d49","title":"因为长度扩大以后,Hash的规则也随之改变

"},{"parent":"af3ec421dc31","children":[],"id":"316f5ac25324","title":"Hash的公式---> index = HashCode(Key) & (Length - 1)

原来长度(Length)是8你位运算出来的值是2 ,新的长度是16位运算出来的值不同

"},{"parent":"af3ec421dc31","children":[],"id":"ff2cf93209dc","title":"HashMap是通过key的HashCode去寻找index的, 如果不进行重写,会出现在一个index中链表的HashCode相等情况,所以要确保相同的对象返回相同的hash值,不同的对象返回不同的hash值,必须要重写equals

"}],"id":"af3ec421dc31","title":"为什么要ReHash而不进行复制?

"}],"id":"6d54a11adce4","title":"扩容机制"},{"parent":"f4b8a675a029","children":[{"parent":"c6437e8e4f45","children":[],"id":"cb7132ed82f1","title":"HashMap源码中put/get方法都没有加同步锁, 无法保证上一秒put的值,下一秒get的时候还是原值,所以线程安全无法保证

"},{"parent":"c6437e8e4f45","children":[{"parent":"9d6423986311","children":[],"id":"b40b3d560950","title":"Collections.synchronizedMap(Map)"},{"parent":"9d6423986311","children":[{"parent":"cdcaf61438fc","children":[{"parent":"404e074072c9","children":[{"parent":"b926b8dd14c9","children":[],"id":"6bf370941ab4","title":"安全失败机制: 这种机制会使你此次读到的数据不一定是最新的数据。

如果你使用null值,就会使得其无法判断对应的key是不存在还是为空,因为你无法再调用一次contain(key)来对key是否存在进行判断,ConcurrentHashMap同理"}],"id":"b926b8dd14c9","title":"Hashtable 是不允许键或值为 null 的,HashMap 的键值则都可以为 null"},{"parent":"404e074072c9","children":[],"id":"c4669cca3ca2","title":"Hashtable 继承了 Dictionary类,而 HashMap 继承的是 AbstractMap 类"},{"parent":"404e074072c9","children":[],"id":"63a75cf7717b","title":"HashMap 的初始容量为:16,Hashtable 初始容量为:11,两者的负载因子默认都是:0.75"},{"parent":"404e074072c9","children":[],"id":"8e6e339c6351","title":"当现有容量大于总容量 * 负载因子时,HashMap 扩容规则为当前容量翻倍,Hashtable 扩容规则为当前容量翻倍 + 1"},{"parent":"404e074072c9","children":[{"parent":"13aa27708fb1","children":[],"id":"e72d018a877c","title":"快速失败(fail—fast)是java集合中的一种机制, 在用迭代器遍历一个集合对象时,如果遍历过程中对集合对象的内容进行了修改(增加、删除、修改),则会抛出ConcurrentModificationException"}],"id":"13aa27708fb1","title":"HashMap 中的 Iterator 迭代器是 fail-fast 的,而 Hashtable 的 Enumerator 不是 fail-fast 的. 所以,当其他线程改变了HashMap 的结构,如:增加、删除元素,将会抛出ConcurrentModificationException 异常,而 Hashtable 则不会"}],"id":"404e074072c9","title":"与HahsMap的区别"}],"id":"cdcaf61438fc","title":"Hashtable"},{"parent":"9d6423986311","children":[],"id":"3aa13f74a283","title":"ConcurrentHashMap"}],"id":"9d6423986311","title":"确保线程安全的方式"}],"id":"c6437e8e4f45","title":"线程不安全

"},{"parent":"f4b8a675a029","children":[{"parent":"83e802bbbf44","children":[],"id":"6689f5a28d8d","title":"创建HashMap时最好赋初始值, 而且最好为2的幂,为了位运算的方便

"},{"parent":"83e802bbbf44","children":[{"parent":"33f1a38f62cd","children":[],"id":"245253ad18f8","title":"实现均匀分布, 在使用不是2的幂的数字的时候,Length-1的值是所有二进制位全为1,这种情况下,index的结果等同于HashCode后几位的值,只要输入的HashCode本身分布均匀,Hash算法的结果就是均匀的

"}],"id":"33f1a38f62cd","title":"默认初始化大小为16

"}],"id":"83e802bbbf44","title":"初始化

"},{"parent":"f4b8a675a029","children":[],"id":"1bf62446136a","title":"重写equals必须重写HashCode

"}],"id":"f4b8a675a029","title":"HashMap

"},{"parent":"24f4a3146f29","children":[{"parent":"099533450f79","children":[{"parent":"6c4d02753b0b","children":[],"id":"1bf9ad750241","title":"这种机制会使你此次读到的数据不一定是最新的数据。如果你使用null值,就会使得其无法判断对应的key是不存在还是为空,因为你无法再调用一次contain(key)来对key是否存在进行判断,HashTable同理

"}],"id":"6c4d02753b0b","title":"安全失败机制

"},{"parent":"099533450f79","children":[{"parent":"238f89a01639","children":[],"id":"9a49309bdc28","title":"数据结构: 数组+链表 (Segment 数组、HashEntry 组成)

"},{"parent":"238f89a01639","children":[{"parent":"940aa3e4031c","children":[],"id":"6d0c1ad1257f","title":"HashEntry跟HashMap差不多的,但是不同点是,他使用volatile去修饰了他的数据Value还有下一个节点next

"}],"id":"940aa3e4031c","title":"HashEntry

"},{"parent":"238f89a01639","children":[{"parent":"214133bb3335","children":[{"parent":"c94b076d3d2d","children":[],"id":"ccbe178d4298","title":"继承了ReentrantLock

"},{"parent":"c94b076d3d2d","children":[],"id":"8e8ef1826155","title":"每当一个线程占用锁访问一个 Segment 时,不会影响到其他的 Segment。

如果容量大小是16他的并发度就是16,可以同时允许16个线程操作16个Segment而且还是线程安全的。

"}],"id":"c94b076d3d2d","title":"segment分段锁

"},{"parent":"214133bb3335","children":[{"parent":"d93302980fe7","children":[],"id":"26bfa3a12ad8","title":"尝试自旋获取锁,如果获取失败肯定就有其他线程存在竞争,则利用 scanAndLockForPut() 自旋获取锁

"},{"parent":"d93302980fe7","children":[],"id":"b933436ce63c","title":"如果重试的次数达到了 MAX_SCAN_RETRIES 则改为阻塞锁获取,保证能获取成功

"}],"id":"d93302980fe7","title":"put

"},{"parent":"214133bb3335","children":[{"parent":"e791448ffbae","children":[],"id":"65b8e0356631","title":"由于 HashEntry 中的 value 属性是用 volatile 关键词修饰的,保证了内存可见性,所以每次获取时都是最新值

"},{"parent":"e791448ffbae","children":[],"id":"1748a490b610","title":"ConcurrentHashMap 的 get 方法是非常高效的,因为整个过程都不需要加锁

"}],"id":"e791448ffbae","title":"get

"}],"id":"214133bb3335","title":"并发度高的原因

"}],"id":"238f89a01639","title":"1.7

"},{"parent":"099533450f79","children":[{"parent":"bb9fcd3cdf24","children":[],"id":"cefe93491aa8","title":"数组+链表+红黑树

"},{"parent":"bb9fcd3cdf24","children":[{"parent":"7f1ff4d86665","children":[],"id":"122b03888ad2","title":"抛弃了原有的 Segment 分段锁,而采用了 CAS + synchronized 来保证并发安全性

"}],"id":"7f1ff4d86665","title":"区别

"},{"parent":"bb9fcd3cdf24","children":[{"parent":"292fc2483f4c","children":[],"id":"1181a0ece609","title":"根据 key 计算出 hashcode

"},{"parent":"292fc2483f4c","children":[],"id":"17e33984c1e5","title":"判断是否需要进行初始化

"},{"parent":"292fc2483f4c","children":[],"id":"d0bfa1371c32","title":"即为当前 key 定位出的 Node,如果为空表示当前位置可以写入数据,利用 CAS 尝试写入,失败则自旋保证成功

"},{"parent":"292fc2483f4c","children":[],"id":"1ddaa88f7963","title":"如果当前位置的 hashcode == MOVED == -1,则需要进行扩容

"},{"parent":"292fc2483f4c","children":[],"id":"5735982de5ef","title":"如果都不满足,则利用 synchronized 锁写入数据

"},{"parent":"292fc2483f4c","children":[],"id":"4e006a2b0550","title":"如果数量大于 TREEIFY_THRESHOLD 则要转换为红黑树

"}],"id":"292fc2483f4c","title":"put操作

"},{"parent":"bb9fcd3cdf24","children":[{"parent":"92654a95d638","children":[],"id":"02394dd149e1","title":"根据计算出来的 hashcode 寻址,如果就在桶上那么直接返回值

"},{"parent":"92654a95d638","children":[],"id":"d7b487f3b69b","title":"如果是红黑树那就按照树的方式获取值

"},{"parent":"92654a95d638","children":[],"id":"1c6f9d2d5016","title":"就不满足那就按照链表的方式遍历获取值

"}],"id":"92654a95d638","title":"get操作

"}],"collapsed":false,"id":"bb9fcd3cdf24","title":"1.8

"}],"id":"099533450f79","title":"ConcurrentHashMap

"}],"collapsed":false,"id":"24f4a3146f29","title":"Map"},{"parent":"800ecb4c0776","children":[{"parent":"e7046be1aafc","children":[{"parent":"bb49195cb4a7","children":[],"link":{"title":"https://mp.weixin.qq.com/s/0cMrE87iUxLBw_qTBMYMgA","type":"url","value":"https://mp.weixin.qq.com/s/0cMrE87iUxLBw_qTBMYMgA"},"id":"5448b1ead28d","title":"同步容器(如Vector)的所有操作一定是线程安全的吗?"}],"id":"bb49195cb4a7","title":"相关文档"}],"id":"e7046be1aafc","title":"Vector"},{"parent":"800ecb4c0776","children":[{"parent":"6062a460b43c","children":[{"parent":"d695fc5d8846","children":[],"id":"84bbc18befc1","title":"底层实现的就是HashMap,所以是根据HashCode来判断是否是重复元素

"},{"parent":"d695fc5d8846","children":[],"id":"27e06a58782e","title":"初始化容量是:16, 因为底层实现的是HashMap。加载因子是0.75

"},{"parent":"d695fc5d8846","children":[],"id":"dbcb9b76077a","title":"无序的

"},{"parent":"d695fc5d8846","children":[],"id":"2c468ef34635","title":"HashSet不能根据索引去数据,所以不能用普通的for循环来取出数据,应该用增强for循环,查询性能不好

"}],"id":"d695fc5d8846","title":"HashSet

"},{"parent":"6062a460b43c","children":[{"parent":"3e5fc407007b","children":[],"id":"34347150ba65","title":"底层是实现的TreeMap

"},{"parent":"3e5fc407007b","children":[],"id":"3ead4a788049","title":"元素不能够重复,可以有一个null值,并且这个null值一直在第一个位置上

"},{"parent":"3e5fc407007b","children":[],"id":"432c37f69b42","title":"默认容量:16,加载因子是0.75

"},{"parent":"3e5fc407007b","children":[],"id":"1bf8f3a4b2c3","title":"TreeMap是有序的,这个有序不是存入的和取出的顺序是一样的,而是根据自然规律拍的序

"}],"id":"3e5fc407007b","title":"TreeSet

"}],"id":"6062a460b43c","title":"Set"}],"collapsed":true,"id":"800ecb4c0776","title":"集合"},{"parent":"root","lineStyle":{"randomLineColor":"#F4325C"},"children":[],"id":"ef690530e935","title":"基础"},{"parent":"root","lineStyle":{"randomLineColor":"#A04AFB"},"children":[{"parent":"d61da867cb10","children":[{"parent":"c56b85ccc7f0","children":[],"id":"cd07e14ad850","title":"虚拟机堆

"},{"parent":"c56b85ccc7f0","children":[],"id":"5f3d1d3f67c2","title":"虚拟机栈

"},{"parent":"c56b85ccc7f0","children":[],"id":"b44fa08a79e3","title":"方法区

"},{"parent":"c56b85ccc7f0","children":[],"id":"38e4ffe62590","title":"本地方法栈

"},{"parent":"c56b85ccc7f0","children":[],"id":"6b76f89e7451","title":"程序计数器

"}],"id":"c56b85ccc7f0","title":"Java内存区域

"},{"parent":"d61da867cb10","children":[{"parent":"08f416fb625f","children":[],"id":"95995454f91e","title":"加载->验证->准备->解析->初始化->使用->卸载

"},{"parent":"08f416fb625f","children":[{"parent":"28edebbeb4d6","children":[],"id":"fddd8578d67e","title":"父类加载 不重复加载

"}],"id":"28edebbeb4d6","title":"双亲委派原则

"},{"parent":"08f416fb625f","children":[{"parent":"ff18f9026678","children":[],"id":"ea8db2744206","title":"第一次,在JDK1.2以前,双亲委派模型在JDK1.2引入,ClassLoder在最初已经存在了,为了兼容已有代码,添加了findClass()方法,如果父类加载失败会自动调用findClass()来完成加载

"},{"parent":"ff18f9026678","children":[],"id":"5c240d502f24","title":"第二次,由双亲委派模型缺陷导致,由于双亲委派越基础的类由越上层的加载器进行加载,如果有基础类型调回用户代码回无法解决而产生,出现线程上下文类加载器,会出现父类加载器请求子类加载器完成类加载的行为

"},{"parent":"ff18f9026678","children":[],"id":"5d62862bf3e0","title":"第三次,代码热替换、模块热部署,典型:OSGi每一个程序模块都有一个自己的类加载器

"}],"id":"ff18f9026678","title":"破坏双亲委派模型

"}],"id":"08f416fb625f","title":"类得加载机制"},{"parent":"d61da867cb10","children":[{"parent":"52bb18b06c6e","children":[],"id":"f61579e4d22a","title":"新生代/年轻代

"},{"parent":"52bb18b06c6e","children":[],"id":"72db835ecada","title":"老年代

"},{"parent":"52bb18b06c6e","children":[{"parent":"559cb847a3c3","children":[{"parent":"48946b525dfd","children":[],"id":"b7b6b8d8f03d","title":"字符串存在永久代中,容易出现性能问题和内存溢出

"},{"parent":"48946b525dfd","children":[],"id":"2f7df7f20543","title":"类及方法的信息等比较难确定其大小,因此对于永久代的大小指定比较困难,太小容易出现永久代溢出,太大则容易导致老年代溢出

"},{"parent":"48946b525dfd","children":[],"id":"5439cd0f53c8","title":"永久代会为 GC 带来不必要的复杂度,并且回收效率偏低

"},{"parent":"48946b525dfd","children":[],"id":"9c36c0817672","title":"将 HotSpot 与 JRockit 合二为一

"}],"id":"48946b525dfd","title":"为什么要使用元空间取代永久代的实现?

"},{"parent":"559cb847a3c3","children":[{"parent":"1d6773397050","children":[],"id":"c33f0e9fe53f","title":"元空间并不在虚拟机中,而是使用本地内存。因此默认情况下,元空间的大小仅受本地内存限制

"}],"id":"1d6773397050","title":"元空间与永久代区别

"},{"parent":"559cb847a3c3","children":[{"parent":"afb5323c83d0","children":[],"id":"fbf6f9baf099","title":"-XX:MetaspaceSize:初始空间大小,达到该值会触发垃圾收集进行类型卸载,同时GC会对该值进行调整:如果释放了大量的空间,就适当降低该值;如果释放了很少的空间,那么在不超过MaxMetaspaceSize时,适当提高该值

"},{"parent":"afb5323c83d0","children":[],"id":"8e0c80084aef","title":"-XX:MaxMetaspaceSize:最大空间,默认是没有限制的

"}],"id":"afb5323c83d0","title":"元空间空间大小设置

"}],"id":"559cb847a3c3","title":"永久代/元空间

"},{"parent":"52bb18b06c6e","children":[{"parent":"34170f8e7332","children":[],"id":"9d5df7c5e2eb","title":"根据存活时间

"}],"id":"34170f8e7332","title":"晋升机制

"}],"id":"52bb18b06c6e","title":"分代回收

"},{"parent":"d61da867cb10","children":[{"parent":"c465e3a953e3","children":[{"parent":"2e9e41cbb0e4","children":[],"id":"6ff93afab3be","title":"绝大多数对象都是朝生熄灭的

"}],"id":"2e9e41cbb0e4","title":"弱分代假说

"},{"parent":"c465e3a953e3","children":[{"parent":"759979ad09cd","children":[],"id":"a152f857c10b","title":"熬过越多次垃圾收集过程的对象就越难以消亡

"}],"id":"759979ad09cd","title":"强分代假说

"},{"parent":"c465e3a953e3","children":[{"parent":"4843a46b8196","children":[],"id":"6481cbce8487","title":"跨代引用相对于同代引用来说仅占极少数

"}],"id":"4843a46b8196","title":"跨代引用假说

"}],"id":"c465e3a953e3","title":"分代收集理论

"},{"parent":"d61da867cb10","children":[{"parent":"85ab22cf15b4","children":[],"id":"0c42af972e09","title":""GC Roots"的根对象作为起始节点集,从这些节点开始,根据引用关系向下搜索,如果某个对象到GC Roots间没有任何引用链相连接,则证明此对象是不可能再被使用的

"}],"id":"85ab22cf15b4","title":"可达性分析算法

"},{"parent":"d61da867cb10","children":[{"parent":"133b15c6580f","children":[{"parent":"9bd71d4114f3","children":[],"id":"e602b5dbf42a","title":"强引用是使用最普遍的引用。如果一个对象具有强引用,那垃圾回收器绝不会回收它; 当内存空间不足时,Java虚拟机宁愿抛出OutOfMemoryError错误,使程序异常终止,也不会靠随意回收具有强引用的对象来解决内存不足的问题。

"}],"id":"9bd71d4114f3","title":"强引用 (StrongReference)

"},{"parent":"133b15c6580f","children":[{"parent":"a4d69c685996","children":[],"id":"aaedb29f6e8f","title":"如果一个对象只具有软引用,则内存空间充足时,垃圾回收器就不会回收它;如果内存空间不足了,就会回收这些对象的内存。

"}],"id":"a4d69c685996","title":"软引用 (SoftReference)

"},{"parent":"133b15c6580f","children":[{"parent":"6cdc46b73fd3","children":[],"id":"7acc452f65ab","title":"在垃圾回收器线程扫描它所管辖的内存区域的过程中,一旦发现了只具有弱引用的对象,不管当前内存空间足够与否,都会回收它的内存。

"}],"id":"6cdc46b73fd3","title":"弱引用 (WeakReference)

"},{"parent":"133b15c6580f","children":[{"parent":"5f58e345e898","children":[],"id":"d189c9591839","title":"如果一个对象仅持有虚引用,那么它就和没有任何引用一样,在任何时候都可能被垃圾回收器回收

"}],"id":"5f58e345e898","title":"虚引用 (PhantomReference)

"}],"id":"133b15c6580f","title":"引用

"},{"parent":"d61da867cb10","children":[{"parent":"7a6e56139d11","children":[{"parent":"39f66c681caf","children":[{"parent":"5303c5abf34b","children":[],"id":"e983d3f5fd4a","title":"对象存活较多情况

"},{"parent":"5303c5abf34b","children":[],"id":"b9065a228c05","title":"老年代

"}],"id":"5303c5abf34b","title":"适用场景

"},{"parent":"39f66c681caf","children":[{"parent":"733f3bc0b7ab","children":[],"id":"4f9fdc7ae82e","title":"内存空间碎片化

"},{"parent":"733f3bc0b7ab","children":[],"id":"2a97115e02e3","title":"由于空间碎片导致的提前GC

"},{"parent":"733f3bc0b7ab","children":[{"parent":"706cff5bc691","children":[],"id":"7a5e8f6db9e2","title":"标记需清除或存货对象

"},{"parent":"706cff5bc691","children":[],"id":"3da1d4f2af71","title":"清除标记或未标记对象

"}],"id":"706cff5bc691","title":"扫描了两次

"}],"id":"733f3bc0b7ab","title":"缺点

"}],"id":"39f66c681caf","title":"标记请除

"},{"parent":"7a6e56139d11","children":[{"parent":"463d39f131e0","children":[{"parent":"cc3d3a846262","children":[],"id":"ad8382025fe4","title":"存活对象少比较高效

"},{"parent":"cc3d3a846262","children":[],"id":"fb2a5786103c","title":"扫描了整个空间(标记存活对象并复制移动)

"},{"parent":"cc3d3a846262","children":[],"id":"efebc696f64e","title":"年轻代

"}],"id":"cc3d3a846262","title":"适用场景

"},{"parent":"463d39f131e0","children":[{"parent":"4a07efc633b8","children":[],"id":"ca57a2a2591a","title":"需要空闲空间

"},{"parent":"4a07efc633b8","children":[],"id":"74b68744b877","title":"老年代作为担保空间

"},{"parent":"4a07efc633b8","children":[],"id":"9a2b53e3948c","title":"复制移动对象

"}],"id":"4a07efc633b8","title":"缺点

"}],"id":"463d39f131e0","title":"标记复制

"},{"parent":"7a6e56139d11","children":[{"parent":"6e91691d76fb","children":[{"parent":"ca79fb0161d2","children":[],"id":"3093a8344bf8","title":"对象存活较多情况

"},{"parent":"ca79fb0161d2","children":[],"id":"171500353dee","title":"老年代

"}],"id":"ca79fb0161d2","title":"适用场景

"},{"parent":"6e91691d76fb","children":[{"parent":"2e00b69db6db","children":[],"id":"9cc7fd616235","title":"移动存活对象并更新对象引用

"},{"parent":"2e00b69db6db","children":[],"id":"9f173f5f0a02","title":"Stop The World

"}],"id":"2e00b69db6db","title":"缺点

"}],"id":"6e91691d76fb","title":"标记整理

"},{"parent":"7a6e56139d11","children":[{"parent":"873b4393cb04","children":[],"id":"e55f55cabd04","title":"没办法解决循环引用的问题

"}],"id":"873b4393cb04","title":"引用计数

"}],"id":"7a6e56139d11","title":"垃圾回收机制

"},{"parent":"d61da867cb10","children":[{"parent":"94c48a9cef88","children":[{"parent":"1871a079226a","children":[{"parent":"ec2e2387d0dc","children":[{"parent":"1e82c0b474da","children":[],"id":"4ae444f631e6","title":"Eden

"},{"parent":"1e82c0b474da","children":[],"id":"2e6db7cc3c33","title":"Survivor1

"},{"parent":"1e82c0b474da","children":[],"id":"c07a1cd16c8f","title":"Survivor2

"},{"parent":"1e82c0b474da","children":[{"parent":"150da6938c96","children":[],"id":"79ab826115b2","title":"通过阈值晋升

"}],"id":"150da6938c96","title":"Minor GC

"}],"id":"1e82c0b474da","title":"年轻代"},{"parent":"ec2e2387d0dc","children":[{"parent":"c2b0ef70d896","children":[],"id":"69438d9521b8","title":"Major GC 等价于 Full GC

"}],"id":"c2b0ef70d896","title":"老年代

"},{"parent":"ec2e2387d0dc","children":[],"id":"bb363f2d06eb","title":"永久"}],"id":"ec2e2387d0dc","title":"分代情况

"},{"parent":"1871a079226a","children":[{"parent":"ef9b2adfad54","children":[],"id":"f8a938786281","title":"对CPU资源敏感

"},{"parent":"ef9b2adfad54","children":[],"id":"f34402ad150a","title":"无法处理浮动垃圾

"},{"parent":"ef9b2adfad54","children":[],"id":"1b39a82e9c8c","title":"基于标记清除算法 大量空间碎片

"}],"id":"ef9b2adfad54","title":"缺点

"}],"id":"1871a079226a","title":"CMS

"},{"parent":"94c48a9cef88","children":[{"parent":"9b2c5a694136","children":[],"id":"d3247834f765","title":"分区概念 弱化分代

"},{"parent":"9b2c5a694136","children":[{"parent":"0ac46d282191","children":[],"id":"1eba7428e805","title":"不会产生碎片空间,分配大对象不会提前Full GC

"}],"id":"0ac46d282191","title":"标记整理算法

"},{"parent":"9b2c5a694136","children":[{"parent":"20775041ea09","children":[],"id":"df43ec84ba90","title":"使用参数-XX:MaxGCPauseMills,默认为200毫秒,优先处理回收价值收集最大的Region

"}],"id":"20775041ea09","title":"允许用户设置收集的停顿时间

"},{"parent":"9b2c5a694136","children":[],"id":"0831e78ce2d4","title":"利用CPU多核条件,缩短STW时间

"},{"parent":"9b2c5a694136","children":[],"id":"00fc277cb4e8","title":"原始快照算法(SATB)保证收集线程与用户线程互不干扰,避免标记结果出错

"},{"parent":"9b2c5a694136","children":[{"parent":"64ddc0929330","children":[{"parent":"6bda8393ce9e","children":[],"id":"2194b30b8023","title":"标记STW从GC Roots开始直接可达的对象,借用Minor GC时同步完成

"}],"id":"6bda8393ce9e","title":"初始标记

"},{"parent":"64ddc0929330","children":[{"parent":"8954c96bf47e","children":[],"id":"11402483ace1","title":"从GC Roots开始对堆对象进行可达性分析,找出要回收的对象,与用户程序并发执行,重新处理SATB记录下的并发时引用变动对象

"}],"id":"8954c96bf47e","title":"并发标记

"},{"parent":"64ddc0929330","children":[{"parent":"dcca5f39bc8c","children":[],"id":"2044b2d42f4a","title":"处理并发阶段结束后遗留下来的少量SATB记录

"}],"id":"dcca5f39bc8c","title":"最终标记

"},{"parent":"64ddc0929330","children":[{"parent":"32a05d0acfd4","children":[],"id":"1c5bed528018","title":"根据用户期待的GC停顿时间制定回收计划

"}],"id":"32a05d0acfd4","title":"筛选回收

"}],"id":"64ddc0929330","title":"收集步骤

"},{"parent":"9b2c5a694136","children":[{"parent":"144086fdf009","children":[{"parent":"70dcc7b7411a","children":[{"parent":"bf3ba1d7ab1a","children":[],"id":"6d91b336c4b7","title":"复制一些存活对象到Old区、Survivor区

"}],"id":"bf3ba1d7ab1a","title":"回收所有Eden、Survivor区

"}],"id":"70dcc7b7411a","title":"Minor GC/Young GC

"},{"parent":"144086fdf009","children":[],"id":"44c8c447963a","title":"Mixed GC

"}],"id":"144086fdf009","title":"回收模式

"}],"id":"9b2c5a694136","title":"G1

"},{"parent":"94c48a9cef88","children":[{"parent":"8e74a22eafd1","children":[],"id":"642ff9bd8dbb","title":"G1分区域 每个区域是有老年代概念的,但是收集器以整个区域为单位收集

"},{"parent":"8e74a22eafd1","children":[],"id":"affafbc6a4da","title":"G1回收后马上合并空闲内存,而CMS会在STW的时候合并

"}],"id":"8e74a22eafd1","title":"CMS与G1的区别

"}],"id":"94c48a9cef88","title":"垃圾回收器

"},{"parent":"d61da867cb10","children":[{"parent":"1dfd2da1ccb2","children":[],"id":"09de7462c062","title":"老年代空间不足

"},{"parent":"1dfd2da1ccb2","children":[],"id":"7681c3724945","title":"system.gc()通知JVM进行Full GC

"},{"parent":"1dfd2da1ccb2","children":[],"id":"d1038ecedd23","title":"持久代空间不足

"}],"id":"1dfd2da1ccb2","title":"Full GC

"},{"parent":"d61da867cb10","children":[{"parent":"a7686c6448fb","children":[],"id":"750a3f631db0","title":"在执行垃圾收集算法时,Java应用程序的其他所有线程都被挂起。是Java中一种全局暂停现象,全局停顿,所有Java代码停止,Native代码可以执行,但不能与JVM交互

"}],"id":"a7686c6448fb","title":"STW(Stop The World)

"},{"parent":"d61da867cb10","children":[{"parent":"a7d301228e2a","children":[],"id":"92f20719ac8a","title":"设置堆的最大最小值 -xms -xmx

"},{"parent":"a7d301228e2a","children":[{"parent":"0715cab0f70c","children":[{"parent":"59b27c4fd939","children":[],"id":"e21f787b02c5","title":"防止年轻代堆收缩:老年代同理

"}],"id":"59b27c4fd939","title":"-XX:newSize设置绝对大小

"}],"id":"0715cab0f70c","title":"调整老年和年轻代的比例

"},{"parent":"a7d301228e2a","children":[],"id":"ff8918fe5f06","title":"主要看是否存在更多持久对象和临时对象

"},{"parent":"a7d301228e2a","children":[],"id":"24446fac73b9","title":"观察一段时间 看峰值老年代如何 不影响gc就加大年轻代

"},{"parent":"a7d301228e2a","children":[],"id":"9204d8b66e5f","title":"配置好的机器可以用 并发收集算法

"},{"parent":"a7d301228e2a","children":[],"id":"b58af9729fb9","title":"每个线程默认会开启1M的堆栈 存放栈帧 调用参数 局部变量 太大了 500k够了

"},{"parent":"a7d301228e2a","children":[],"id":"c001d0fcef30","title":"原则 就是减少GC STW

"}],"id":"a7d301228e2a","title":"性能调优

"},{"parent":"d61da867cb10","children":[{"parent":"febcbf010f6e","children":[],"id":"0e938c11383a","title":"jasvism

"},{"parent":"febcbf010f6e","children":[],"id":"64f7c1d4e0c1","title":"dump

"},{"parent":"febcbf010f6e","children":[],"id":"3ae88d77a8e0","title":"监控配置 自动dump

"}],"id":"febcbf010f6e","title":"FullGC 内存泄露排查

"},{"parent":"d61da867cb10","children":[{"parent":"163f2b3e6279","children":[{"parent":"341ea9d0a8b1","children":[{"parent":"7b2c9c719a5b","children":[],"id":"52bb357adfd0","title":"开启逃逸分析:-XX:+DoEscapeAnalysis

关闭逃逸分析:-XX:-DoEscapeAnalysis

显示分析结果:-XX:+PrintEscapeAnalysis

"}],"id":"7b2c9c719a5b","title":"Java Hotspot 虚拟机可以分析新创建对象的使用范围,并决定是否在 Java 堆上分配内存的一项技术

"}],"id":"341ea9d0a8b1","title":"概念

"},{"parent":"163f2b3e6279","children":[{"parent":"2951be8a5ab7","children":[{"parent":"19414f3dfc6b","children":[],"id":"b8919c87025b","title":"即一个对象的作用范围逃出了当前方法或者当前线程

"},{"parent":"19414f3dfc6b","children":[{"parent":"f3f5a4d41ca7","children":[],"id":"78e7003b3c6c","title":"对象是一个静态变量

"},{"parent":"f3f5a4d41ca7","children":[],"id":"57d7fe2eba87","title":"对象是一个已经发生逃逸的对象

"},{"parent":"f3f5a4d41ca7","children":[],"id":"f72de2af0484","title":"对象作为当前方法的返回值

"}],"id":"f3f5a4d41ca7","title":"场景

"}],"id":"19414f3dfc6b","title":"全局逃逸

"},{"parent":"2951be8a5ab7","children":[{"parent":"078cd7effe02","children":[],"id":"c4a9820ede62","title":"即一个对象被作为方法参数传递或者被参数引用,但在调用过程中不会发生全局逃逸

"}],"id":"078cd7effe02","title":"参数级逃逸

"},{"parent":"2951be8a5ab7","children":[{"parent":"dfb6626fa13e","children":[],"id":"a1f76aa518e6","title":"即方法中的对象没有发生逃逸

"}],"id":"dfb6626fa13e","title":"没有逃逸

"}],"id":"2951be8a5ab7","title":"逃逸状态

"},{"parent":"163f2b3e6279","children":[{"parent":"74538b6f0667","children":[{"parent":"22052ecf3777","children":[],"id":"1bb3c11ad593","title":"开启锁消除:-XX:+EliminateLocks

关闭锁消除:-XX:-EliminateLocks

"}],"id":"22052ecf3777","title":"锁消除

"},{"parent":"74538b6f0667","children":[{"parent":"d19fda364ab3","children":[],"id":"618f15119b55","title":"开启标量替换:-XX:+EliminateAllocations

关闭标量替换:-XX:-EliminateAllocations

显示标量替换详情:-XX:+PrintEliminateAllocations

"}],"id":"d19fda364ab3","title":"标量替换

"},{"parent":"74538b6f0667","children":[{"parent":"58e0672d4093","children":[],"id":"852d0a874d5c","title":"当对象没有发生逃逸时,该对象就可以通过标量替换分解成成员标量分配在栈内存中,和方法的生命周期一致,随着栈帧出栈时销毁,减少了 GC 压力,提高了应用程序性能

"}],"id":"58e0672d4093","title":"栈上分配

"}],"id":"74538b6f0667","title":"逃逸分析优化

"},{"parent":"163f2b3e6279","children":[{"parent":"0c9fe2ae4750","children":[],"id":"d4e70d6dd352","title":"在平时开发过程中尽可能的控制变量的作用范围了,变量范围越小越好,让虚拟机尽可能有优化的空间

"}],"id":"0c9fe2ae4750","title":"结论

"}],"id":"163f2b3e6279","title":"逃逸分析

"},{"parent":"d61da867cb10","children":[{"parent":"f01a444aff49","children":[{"parent":"e5b794712d9f","children":[{"parent":"69d4722f6204","children":[],"id":"9058d548b851","title":"当堆内存(Heap Space)没有足够空间存放新创建的对象时,会抛出

"},{"parent":"69d4722f6204","children":[{"parent":"82077529709e","children":[],"id":"e5efc4b324bd","title":"请求创建一个超大对象,通常是一个大数组

"},{"parent":"82077529709e","children":[],"id":"f6c6d999b2e2","title":"超出预期的访问量/数据量,通常是上游系统请求流量飙升,常见于各类促销/秒杀活动,可以结合业务流量指标排查是否有尖状峰值

"},{"parent":"82077529709e","children":[],"id":"810ed21d175e","title":"过度使用终结器(Finalizer),该对象没有立即被 GC

"},{"parent":"82077529709e","children":[],"id":"12f8716631c0","title":"内存泄漏(Memory Leak),大量对象引用没有释放,JVM 无法对其自动回收,常见于使用了 File 等资源没有回收

"}],"id":"82077529709e","title":"场景

"},{"parent":"69d4722f6204","children":[{"parent":"bb0032b74d7a","children":[],"id":"4508b1e1415b","title":"针对大部分情况,通常只需要通过 -Xmx 参数调高 JVM 堆内存空间即可

"},{"parent":"bb0032b74d7a","children":[],"id":"8a21793fb810","title":"如果是超大对象,可以检查其合理性,比如是否一次性查询了数据库全部结果,而没有做结果数限制

"},{"parent":"bb0032b74d7a","children":[],"id":"7970de1c13ce","title":"如果是业务峰值压力,可以考虑添加机器资源,或者做限流降级

"},{"parent":"bb0032b74d7a","children":[],"id":"61721dad15fd","title":"如果是内存泄漏,需要找到持有的对象,修改代码设计,比如关闭没有释放的连接

"}],"id":"bb0032b74d7a","title":"解决方案

"}],"id":"69d4722f6204","title":"Java heap space

"},{"parent":"e5b794712d9f","children":[{"parent":"16ea8d379757","children":[],"id":"089b25ad7859","title":"当 Java 进程花费 98% 以上的时间执行 GC,但只恢复了不到 2% 的内存,且该动作连续重复了 5 次,就会抛出

"},{"parent":"16ea8d379757","children":[],"id":"4cccd7722816","title":"场景与解决方案与Java heap space类似

"}],"id":"16ea8d379757","title":"GC overhead limit exceeded

"},{"parent":"e5b794712d9f","children":[{"parent":"27f250898d47","children":[],"id":"b5735a767281","title":"该错误表示永久代(Permanent Generation)已用满,通常是因为加载的 class 数目太多或体积太大

"},{"parent":"27f250898d47","children":[{"parent":"7adba9e7ee50","children":[],"id":"f92f654bc6f2","title":"程序启动报错,修改 -XX:MaxPermSize 启动参数,调大永久代空间

"},{"parent":"7adba9e7ee50","children":[],"id":"a1ba084f84ae","title":"应用重新部署时报错,很可能是没有应用没有重启,导致加载了多份 class 信息,只需重启 JVM 即可解决

"},{"parent":"7adba9e7ee50","children":[],"id":"ac1dd7e1efe5","title":"运行时报错,应用程序可能会动态创建大量 class,而这些 class 的生命周期很短暂,但是 JVM 默认不会卸载 class,可以设置 -XX:+CMSClassUnloadingEnabled 和 -XX:+UseConcMarkSweepGC这两个参数允许 JVM 卸载 class

"},{"parent":"7adba9e7ee50","children":[],"id":"9908b8d183cb","title":"如果上述方法无法解决,可以通过 jmap 命令 dump 内存对象 jmap-dump:format=b,file=dump.hprof<process-id> ,然后利用 Eclipse MAT功能逐一分析开销最大的 classloader 和重复 class

"}],"id":"7adba9e7ee50","title":"解决方案

"}],"id":"27f250898d47","title":"Permgen space

"},{"parent":"e5b794712d9f","children":[{"parent":"5d9794eb44e3","children":[],"id":"ff167c68cd26","title":"该错误表示 Metaspace 已被用满,通常是因为加载的 class 数目太多或体积太大

"},{"parent":"5d9794eb44e3","children":[],"id":"9e30222f9cdd","title":"场景与解决方案与Permgen space类似,需注意调整元空间大小参数为 -XX:MaxMetaspaceSize

"}],"id":"5d9794eb44e3","title":"Metaspace(元空间)

"},{"parent":"e5b794712d9f","children":[{"parent":"88733d6fd9e1","children":[],"id":"4b3170dc49e7","title":"当 JVM 向底层操作系统请求创建一个新的 Native 线程时,如果没有足够的资源分配就会报此类错误

"},{"parent":"88733d6fd9e1","children":[{"parent":"2de99f106fd9","children":[],"id":"d2894deebffe","title":"线程数超过操作系统最大线程数 ulimit 限制

"},{"parent":"2de99f106fd9","children":[],"id":"6b4a4957f1f7","title":"线程数超过 kernel.pid_max(只能重启)

"},{"parent":"2de99f106fd9","children":[],"id":"697aa13d5593","title":"Native 内存不足

"}],"id":"2de99f106fd9","title":"场景

"},{"parent":"88733d6fd9e1","children":[{"parent":"d6e225b4cdbd","children":[],"id":"8f7d0718c783","title":"升级配置,为机器提供更多的内存

"},{"parent":"d6e225b4cdbd","children":[],"id":"fd893dac8e71","title":"降低 Java Heap Space 大小

"},{"parent":"d6e225b4cdbd","children":[],"id":"96d3dc50bfcf","title":"修复应用程序的线程泄漏问题

"},{"parent":"d6e225b4cdbd","children":[],"id":"0ab47fa12a24","title":"限制线程池大小

"},{"parent":"d6e225b4cdbd","children":[],"id":"28f3069b69cc","title":"使用 -Xss 参数减少线程栈的大小

"},{"parent":"d6e225b4cdbd","children":[],"id":"5b123c27cf17","title":"调高 OS 层面的线程最大数:执行 ulimia-a 查看最大线程数限制,使用 ulimit-u xxx 调整最大线程数限制

"}],"id":"d6e225b4cdbd","title":"解决方案

"}],"id":"88733d6fd9e1","title":"Unable to create new native thread

"},{"parent":"e5b794712d9f","children":[{"parent":"a207e8502e59","children":[],"id":"ee75aeab507e","title":"虚拟内存(Virtual Memory)由物理内存(Physical Memory)和交换空间(Swap Space)两部分组成。当运行时程序请求的虚拟内存溢出时就会报 Outof swap space? 错误

"},{"parent":"a207e8502e59","children":[{"parent":"e9aff14f85ee","children":[],"id":"f5d926e1a54d","title":"地址空间不足

"},{"parent":"e9aff14f85ee","children":[],"id":"0ab3e7bd4ce0","title":"物理内存已耗光

"},{"parent":"e9aff14f85ee","children":[],"id":"a719e6915e62","title":"应用程序的本地内存泄漏(native leak),例如不断申请本地内存,却不释放

"},{"parent":"e9aff14f85ee","children":[],"id":"95178fc0d9c2","title":"执行 jmap-histo:live<pid> 命令,强制执行 Full GC;如果几次执行后内存明显下降,则基本确认为 Direct ByteBuffer 问题

"}],"id":"e9aff14f85ee","title":"场景

"},{"parent":"a207e8502e59","children":[{"parent":"69838d20af49","children":[],"id":"f6eb75443db8","title":"升级地址空间为 64 bit

"},{"parent":"69838d20af49","children":[],"id":"4c2d62f88cd0","title":"使用 Arthas 检查是否为 Inflater/Deflater 解压缩问题,如果是,则显式调用 end 方法

"},{"parent":"69838d20af49","children":[],"id":"8149b8d47a40","title":"Direct ByteBuffer 问题可以通过启动参数 -XX:MaxDirectMemorySize 调低阈值

"},{"parent":"69838d20af49","children":[],"id":"67cf9ef9b29d","title":"升级服务器配置/隔离部署,避免争用

"}],"id":"69838d20af49","title":"解决方案

"}],"id":"a207e8502e59","title":"Out of swap space?

"},{"parent":"e5b794712d9f","children":[{"parent":"fc5d0b351a1a","children":[],"id":"dcbc57e34a45","title":"有一种内核作业(Kernel Job)名为 Out of Memory Killer,它会在可用内存极低的情况下"杀死"(kill)某些进程。OOM Killer 会对所有进程进行打分,然后将评分较低的进程"杀死",Killprocessorsacrifice child 错误不是由 JVM 层面触发的,而是由操作系统层面触发的

"},{"parent":"fc5d0b351a1a","children":[{"parent":"959ac5e8a270","children":[],"id":"a97b65d73f88","title":"默认情况下,Linux 内核允许进程申请的内存总量大于系统可用内存,通过这种"错峰复用"的方式可以更有效的利用系统资源。

然而,这种方式也会无可避免地带来一定的"超卖"风险。例如某些进程持续占用系统内存,然后导致其他进程没有可用内存。此时,系统将自动激活 OOM Killer,寻找评分低的进程,并将其"杀死",释放内存资源

"}],"id":"959ac5e8a270","title":"场景

"},{"parent":"fc5d0b351a1a","children":[{"parent":"a7ada05bbd00","children":[],"id":"2b1d922217cf","title":"升级服务器配置/隔离部署,避免争用

"},{"parent":"a7ada05bbd00","children":[],"id":"b9502a1fe60b","title":"OOM Killer 调优

"}],"id":"a7ada05bbd00","title":"解决方案

"}],"id":"fc5d0b351a1a","title":"Kill process or sacrifice child

"},{"parent":"e5b794712d9f","children":[{"parent":"4dfd99c9bc90","children":[],"id":"52471cca5e33","title":"JVM 限制了数组的最大长度,该错误表示程序请求创建的数组超过最大长度限制

"},{"parent":"4dfd99c9bc90","children":[{"parent":"7a7846a84d01","children":[],"id":"ef42a376573a","title":"检查代码,确认业务是否需要创建如此大的数组,是否可以拆分为多个块,分批执行

"}],"id":"7a7846a84d01","title":"解决方案

"}],"id":"4dfd99c9bc90","title":"Requested array size exceeds VM limit

"},{"parent":"e5b794712d9f","children":[{"parent":"926260b12712","children":[],"id":"7deb1188a580","title":"Direct ByteBuffer 的默认大小为 64 MB,一旦使用超出限制,就会抛出 Directbuffer memory 错误

"},{"parent":"926260b12712","children":[{"parent":"c8bb4e5de982","children":[],"id":"558937529cce","title":"Java 只能通过 ByteBuffer.allocateDirect 方法使用 Direct ByteBuffer,因此,可以通过 Arthas 等在线诊断工具拦截该方法进行排查

"},{"parent":"c8bb4e5de982","children":[],"id":"04d0566021d0","title":"检查是否直接或间接使用了 NIO,如 netty,jetty 等

"},{"parent":"c8bb4e5de982","children":[],"id":"a5ff4af8fc72","title":"通过启动参数 -XX:MaxDirectMemorySize 调整 Direct ByteBuffer 的上限值

"},{"parent":"c8bb4e5de982","children":[],"id":"eb97fd5380b3","title":"检查 JVM 参数是否有 -XX:+DisableExplicitGC 选项,如果有就去掉,因为该参数会使 System.gc()失效

"},{"parent":"c8bb4e5de982","children":[],"id":"e19382936700","title":"检查堆外内存使用代码,确认是否存在内存泄漏;或者通过反射调用 sun.misc.Cleaner 的 clean() 方法来主动释放被 Direct ByteBuffer 持有的内存空间

"},{"parent":"c8bb4e5de982","children":[],"id":"b338bbfe6fec","title":"内存容量确实不足,升级配置

"}],"id":"c8bb4e5de982","title":"解决方案

"}],"id":"926260b12712","title":"Direct buffer memory

"}],"collapsed":false,"id":"e5b794712d9f","title":"OOM

"},{"parent":"f01a444aff49","children":[],"id":"4fca236e0053","title":"内存泄露"},{"parent":"f01a444aff49","children":[],"id":"31b72a753814","title":"线程死锁

"},{"parent":"f01a444aff49","children":[],"id":"f5310ade47dc","title":"锁争用

"},{"parent":"f01a444aff49","children":[],"id":"be43a23fb08e","title":"Java进程消耗CPU过高

"}],"id":"f01a444aff49","title":"JVM调优

"},{"parent":"d61da867cb10","children":[{"parent":"5c6067b84b1e","children":[],"id":"03fed9bf17f7","title":"Jconsole"},{"parent":"5c6067b84b1e","children":[],"id":"b0ec90eb542b","title":"Jprofiler"},{"parent":"5c6067b84b1e","children":[],"id":"baa86063ec3e","title":"jvisualvm"},{"parent":"5c6067b84b1e","children":[],"id":"c7c342ec412e","title":"MAT"}],"id":"5c6067b84b1e","title":"JVM性能检测工具"},{"parent":"d61da867cb10","children":[{"parent":"9a58c2256f22","children":[],"id":"541fa6a430b7","title":"help dump"},{"parent":"9a58c2256f22","children":[],"id":"63ed06e60104","title":"生产机 dump"},{"parent":"9a58c2256f22","children":[],"id":"29df5ce1b166","title":"mat"},{"parent":"9a58c2256f22","children":[],"id":"a2815d62f70f","title":"jmap"},{"parent":"9a58c2256f22","children":[],"id":"aa872dbc3855","title":"-helpdump"}],"id":"9a58c2256f22","title":"内存泄露"},{"parent":"d61da867cb10","children":[{"parent":"dbe9489e1531","children":[],"id":"30af4ef10e6d","title":"topc -c"},{"parent":"dbe9489e1531","children":[],"id":"f413c8870538","title":"top -Hp pid"},{"parent":"dbe9489e1531","children":[{"parent":"d7a7bb2d1ce7","children":[],"id":"3d609323d047","title":"进制转换"}],"id":"d7a7bb2d1ce7","title":"jstack"},{"parent":"dbe9489e1531","children":[],"id":"28dc67e9bcc8","title":"cat

"}],"id":"dbe9489e1531","title":"CPU100%"}],"collapsed":true,"id":"d61da867cb10","title":"JVM"},{"parent":"root","lineStyle":{"randomLineColor":"#FF8502"},"children":[],"id":"ecbf53fba47d","title":"多线程"},{"parent":"root","lineStyle":{"randomLineColor":"#F5479C"},"children":[{"parent":"d1383b5af44b","children":[{"parent":"6846db773567","children":[{"parent":"9cfcc064de09","children":[],"id":"3cc1cea3b5d2","title":"Spring 中的 Bean 默认都是单例的"}],"id":"9cfcc064de09","title":"单例模式"},{"parent":"6846db773567","children":[{"parent":"70e04d48b002","children":[],"id":"79b9ad909b14","title":"Spring使用工厂模式通过 BeanFactory、ApplicationContext 创建 bean 对象"}],"id":"70e04d48b002","title":"工厂模式"},{"parent":"6846db773567","children":[{"parent":"18539d17e1a2","children":[],"id":"f10b087651f0","title":"Spring AOP 的增强或通知(Advice)使用到了适配器模式、spring MVC 中也是用到了适配器模式适配Controller"}],"id":"18539d17e1a2","title":"适配器模式

"},{"parent":"6846db773567","children":[{"parent":"aa0d772a111e","children":[],"id":"458c4c117473","title":"Spring AOP 功能的实现"}],"id":"aa0d772a111e","title":"代理设计模式

"},{"parent":"6846db773567","children":[{"parent":"cf993fcce1fa","children":[],"id":"5a9fd93671a5","title":"Spring 事件驱动模型就是观察者模式很经典的一个应用"}],"id":"cf993fcce1fa","title":"观察者模式

"},{"parent":"6846db773567","children":[],"id":"4761f7394f26","title":"... ..."}],"id":"6846db773567","title":"设计模式"},{"parent":"d1383b5af44b","children":[{"parent":"6be9b379c857","children":[{"image":{"w":721,"h":306,"url":"http://cdn.processon.com/60d5841c07912920c8095f17?e=1624609325&token=trhI0BY8QfVrIGn9nENop6JAc6l5nZuxhjQ62UfM:vvIb8jvxokB9aizRUTjAy8Dz4NI="},"parent":"8218ea06bbce","children":[{"parent":"f7aea39c3b24","children":[],"id":"408ac2ef4844","title":"Bean 容器找到配置文件中 Spring Bean 的定义"},{"parent":"f7aea39c3b24","children":[],"id":"34992c358614","title":"Bean 容器利用 Java Reflection API 创建一个Bean的实例"},{"parent":"f7aea39c3b24","children":[],"id":"e7981f427e63","title":"如果涉及到一些属性值 利用 set()方法设置一些属性值"},{"parent":"f7aea39c3b24","children":[],"id":"dbf75a139571","title":"如果 Bean 实现了 BeanNameAware 接口,调用 setBeanName()方法,传入Bean的名字"},{"parent":"f7aea39c3b24","children":[],"id":"787517f23307","title":"如果 Bean 实现了 BeanClassLoaderAware 接口,调用 setBeanClassLoader()方法,传入 ClassLoader对象的实例"},{"parent":"f7aea39c3b24","children":[],"id":"6466197a3ddf","title":"如果Bean实现了 BeanFactoryAware 接口,调用 setBeanClassLoader()方法,传入 ClassLoader 对象的实例"},{"parent":"f7aea39c3b24","children":[],"id":"feac889b1b8d","title":"与上面的类似,如果实现了其他 *.Aware接口,就调用相应的方法"},{"parent":"f7aea39c3b24","children":[],"id":"91b027b10223","title":"如果有和加载这个 Bean 的 Spring 容器相关的 BeanPostProcessor 对象,执行postProcessBeforeInitialization() 方法"},{"parent":"f7aea39c3b24","children":[],"id":"b1af63401bf5","title":"如果Bean实现了InitializingBean接口,执行afterPropertiesSet()方法

"},{"parent":"f7aea39c3b24","children":[],"id":"37fa23a9c16a","title":"如果 Bean 在配置文件中的定义包含 init-method 属性,执行指定的方法"},{"parent":"f7aea39c3b24","children":[],"id":"b57d01a401e0","title":"如果有和加载这个 Bean的 Spring 容器相关的 BeanPostProcessor 对象,执行postProcessAfterInitialization() 方法"},{"parent":"f7aea39c3b24","children":[],"id":"baf7bd961172","title":"当要销毁 Bean 的时候,如果 Bean 实现了 DisposableBean 接口,执行 destroy() 方法"},{"parent":"f7aea39c3b24","children":[],"id":"da536275f445","title":"当要销毁 Bean 的时候,如果 Bean 在配置文件中的定义包含 destroy-method 属性,执行指定的方法"}],"style":{"text-align":"center"},"id":"f7aea39c3b24","title":"Spring Bean 生命周期"}],"id":"8218ea06bbce","title":"生命周期"},{"parent":"6be9b379c857","children":[{"parent":"780f6b30ba11","children":[{"parent":"69fa69a6315a","children":[],"id":"847478f4b37e","title":"唯一 bean 实例,Spring 中的 bean 默认都是单例的

"}],"id":"69fa69a6315a","title":"singleton

"},{"parent":"780f6b30ba11","children":[{"parent":"61779d764f15","children":[],"id":"1b7c8dc3e783","title":"每次请求都会创建一个新的 bean 实例

"}],"id":"61779d764f15","title":"prototype

"},{"parent":"780f6b30ba11","children":[{"parent":"b84f40579383","children":[],"id":"a2c0221f2a17","title":"每一次HTTP请求都会产生一个新的bean,该bean仅在当前HTTP request内有效

"}],"id":"b84f40579383","title":"request

"},{"parent":"780f6b30ba11","children":[{"parent":"2980654747ac","children":[],"id":"df20b0568d9b","title":"每一次HTTP请求都会产生一个新的 bean,该bean仅在当前 HTTP session 内有效

"}],"id":"2980654747ac","title":"session

"}],"id":"780f6b30ba11","title":"作用域"},{"parent":"6be9b379c857","children":[{"parent":"94d2392b941d","children":[],"id":"f6facb5c7bdf","title":"单例 bean 存在线程问题,主要是因为当多个线程操作同一个对象的时候,对这个对象的非静态成员变量的写操作会存在线程安全问题

"},{"parent":"94d2392b941d","children":[{"parent":"c50f84f9dd07","children":[],"id":"6a052cae7ac3","title":"在Bean对象中尽量避免定义可变的成员变量(不太现实)"},{"parent":"c50f84f9dd07","children":[],"id":"be83d8ee0d9c","title":"在类中定义一个ThreadLocal成员变量,将需要的可变成员变量保存在 ThreadLocal 中(推荐的一种方式)"}],"id":"c50f84f9dd07","title":"解决方案"}],"id":"94d2392b941d","title":"单例Bean线程不安全"}],"id":"6be9b379c857","title":"Bean"},{"parent":"d1383b5af44b","children":[{"parent":"2bd94a15f271","children":[{"parent":"db24e1d06ef6","children":[],"id":"c975e1461b17","title":"循环依赖其实就是循环引用,一个或多个对象实例之间存在直接或间接的依赖关系,这种依赖关系构成了构成一个环形调用"}],"id":"db24e1d06ef6","title":"定义"},{"parent":"2bd94a15f271","children":[{"parent":"6429ee8470d0","children":[{"parent":"b444d530df33","children":[],"id":"fe4447498b81","title":"可以解决"}],"id":"b444d530df33","title":"单例setter注入

"},{"parent":"6429ee8470d0","children":[{"parent":"a628a0c088fe","children":[],"id":"7d2affdf5098","title":"不能解决"}],"id":"a628a0c088fe","title":"多例setter注入"},{"parent":"6429ee8470d0","children":[{"parent":"52ccb73aaa1c","children":[],"id":"c5d72e9224fc","title":"不能解决"}],"id":"52ccb73aaa1c","title":"构造器注入"},{"parent":"6429ee8470d0","children":[{"parent":"e0ddcb98872d","children":[],"id":"8bc315c0e4f4","title":"有可能解决"}],"id":"e0ddcb98872d","title":"单例的代理对象注入

"},{"parent":"6429ee8470d0","children":[{"parent":"c1883b1d1d04","children":[],"id":"30c4d71d67b7","title":"不能解决"}],"id":"c1883b1d1d04","title":"DependOn循环依赖

"}],"id":"6429ee8470d0","title":"主要场景"},{"parent":"2bd94a15f271","children":[{"parent":"34656efe2153","children":[],"id":"07eedf3c5055","title":"一级缓存: 用于保存实例化、注入、初始化完成的bean实例

"},{"parent":"34656efe2153","children":[],"id":"5389148434c4","title":"二级缓存: 用于保存实例化完成的bean实例"},{"parent":"34656efe2153","children":[],"id":"de57eefcdc3f","title":"三级缓存: 用于保存bean创建工厂,以便于后面扩展有机会创建代理对象"}],"id":"34656efe2153","title":"三级缓存"},{"parent":"2bd94a15f271","children":[{"image":{"w":720,"h":309,"url":"http://cdn.processon.com/60d58be3e0b34d7f1166296f?e=1624611315&token=trhI0BY8QfVrIGn9nENop6JAc6l5nZuxhjQ62UfM:Szb4rjzK4-RP973_dTYTIc1x1yg="},"parent":"7537dcdb4537","children":[],"style":{"text-align":"center"},"id":"813c5d07800b","title":"Spring解决循环依赖"}],"id":"7537dcdb4537","title":"Spring如何解决循环依赖?"},{"parent":"2bd94a15f271","children":[{"parent":"6809a28c84fb","children":[{"parent":"0b65efef98be","children":[],"id":"edb8657d8633","title":"使用@Lazy注解,延迟加载

"},{"parent":"0b65efef98be","children":[],"id":"713bcafda8f8","title":"使用@DependsOn注解,指定加载先后关系

"},{"parent":"0b65efef98be","children":[],"id":"ecde0ac2eff5","title":"修改文件名称,改变循环依赖类的加载顺序

"}],"id":"0b65efef98be","title":"生成代理对象产生的循环依赖

"},{"parent":"6809a28c84fb","children":[{"parent":"19fc35c94a10","children":[],"id":"a898194da78e","title":"找到@DependsOn注解循环依赖的地方,迫使它不循环依赖

"}],"id":"19fc35c94a10","title":"使用@DependsOn产生的循环依赖

"},{"parent":"6809a28c84fb","children":[{"parent":"67569155e5e1","children":[],"id":"fbefaf05f15b","title":"把bean改成单例"}],"id":"67569155e5e1","title":"多例循环依赖"},{"parent":"6809a28c84fb","children":[{"parent":"d1721015c62e","children":[],"id":"086c2cf4a1f1","title":"使用@Lazy注解解决"}],"id":"d1721015c62e","title":"构造器循环依赖"}],"id":"6809a28c84fb","title":"Spring无法解决的循环依赖怎么解决?"}],"id":"2bd94a15f271","title":"循环依赖"},{"parent":"d1383b5af44b","children":[{"parent":"7525c1be5308","children":[],"id":"2efd2dd7603f","title":"Spring是父容器,SpringMVC是子容器,Spring父容器中注册的Bean对SpringMVC子容器是可见的,反之则不行"}],"id":"7525c1be5308","title":"父子容器"},{"parent":"d1383b5af44b","children":[{"parent":"2d661386d933","children":[],"id":"9dedda0f87c3","title":"采用不同的连接器"},{"parent":"2d661386d933","children":[{"parent":"9478703f6033","children":[],"id":"906c6c2186d9","title":"共享链接"}],"id":"9478703f6033","title":"用AOP 新建立了一个 链接"},{"parent":"2d661386d933","children":[],"id":"c0c888325014","title":"ThreadLocal 当前事务"},{"parent":"2d661386d933","children":[],"id":"cdf3bf19398a","title":"前提是 关闭AutoCommit"}],"id":"2d661386d933","title":"事务实现原理"},{"parent":"d1383b5af44b","children":[{"parent":"3fc3209ef908","children":[{"parent":"00348542df62","children":[{"parent":"7e65680e758d","children":[],"id":"9d4a3af2166d","title":"如果当前存在事务,则加入该事务;如果当前没有事务,则创建一个新的事务"}],"id":"7e65680e758d","title":"PROPAGATION_REQUIRED"},{"parent":"00348542df62","children":[{"parent":"fbcd20add78f","children":[],"id":"bb981fa897b5","title":"如果当前存在事务,则加入该事务;如果当前没有事务,则以非事务的方式继续运行"}],"id":"fbcd20add78f","title":"PROPAGATION_SUPPORTS"},{"parent":"00348542df62","children":[{"parent":"fa9c9e3e91b3","children":[],"id":"f0bd2ab03cb3","title":"如果当前存在事务,则加入该事务;如果当前没有事务,则抛出异常。(mandatory:强制性)"}],"id":"fa9c9e3e91b3","title":"PROPAGATION_MANDATORY"}],"id":"00348542df62","title":"支持当前事务的情况"},{"parent":"3fc3209ef908","children":[{"parent":"be43db2ade73","children":[{"parent":"274fe413f9a9","children":[],"id":"a9835b9de879","title":"创建一个新的事务,如果当前存在事务,则把当前事务挂起"}],"id":"274fe413f9a9","title":"PROPAGATION_REQUIRES_NEW"},{"parent":"be43db2ade73","children":[{"parent":"6de124ee0323","children":[],"id":"73808be05652","title":"以非事务方式运行,如果当前存在事务,则把当前事务挂起"}],"id":"6de124ee0323","title":"PROPAGATION_NOT_SUPPORTED"},{"parent":"be43db2ade73","children":[{"parent":"b7f5bd597d94","children":[],"id":"aade3bc122d2","title":"以非事务方式运行,如果当前存在事务,则抛出异常"}],"id":"b7f5bd597d94","title":"PROPAGATION_NEVER"}],"id":"be43db2ade73","title":"不支持当前事务的情况"},{"parent":"3fc3209ef908","children":[{"parent":"c633fc129e54","children":[{"parent":"7f933d08995d","children":[],"id":"29d2241160ac","title":"如果当前存在事务,则创建一个事务作为当前事务的嵌套事务来运行;如果当前没有事务,则该取值等价于PROPAGATION_REQUIRED"}],"id":"7f933d08995d","title":"PROPAGATION_NESTED"}],"id":"c633fc129e54","title":"其他情况"}],"id":"3fc3209ef908","title":"事务的传播行为"},{"parent":"d1383b5af44b","children":[{"parent":"d09326c239bc","children":[{"parent":"0ddcc4fc0973","children":[],"id":"2b143ba58aa6","title":"实现类"}],"id":"0ddcc4fc0973","title":"静态代理"},{"parent":"d09326c239bc","children":[{"parent":"e88e55b4f4a1","children":[{"parent":"93e7c1779448","children":[{"parent":"2b790def7035","children":[{"parent":"8aadeb882937","children":[],"id":"b8325d0d990f","title":"调用具体方法的时候调用invokeHandler"}],"id":"8aadeb882937","title":"java反射机制生成一个代理接口的匿名类"}],"id":"2b790def7035","title":"实现接口"}],"id":"93e7c1779448","title":"JDK动态代理"},{"parent":"e88e55b4f4a1","children":[{"parent":"ad4aa9aa8708","children":[{"parent":"d62f18134c3b","children":[],"id":"f4f870f8a078","title":"修改字节码生成子类去处理"}],"id":"d62f18134c3b","title":"asm字节码编辑技术动态创建类 基于classLoad装载"}],"id":"ad4aa9aa8708","title":"cglib"}],"id":"e88e55b4f4a1","title":"动态代理"}],"id":"d09326c239bc","title":"AOP"},{"parent":"d1383b5af44b","children":[],"id":"321b75efaafa","title":"IOC"}],"collapsed":true,"id":"d1383b5af44b","title":"Spring

"},{"parent":"root","lineStyle":{"randomLineColor":"#4D69FD"},"children":[{"parent":"93b7f849fef3","children":[{"parent":"2d833717c2db","children":[{"parent":"688bd93ca78f","children":[{"parent":"adb794ebf462","children":[],"id":"28b48a3fc7d9","title":"MVCC支持高并发、四个隔离级别(默认为可重复读)、支持事务操作、聚簇索引

"}],"id":"adb794ebf462","title":"InnoDB

"},{"parent":"688bd93ca78f","children":[{"parent":"9851e7c98465","children":[],"id":"6cda6ad38022","title":"全文索引、压缩、空间函数、崩溃后无法安全恢复

"}],"id":"9851e7c98465","title":"MyISAM

"}],"id":"688bd93ca78f","title":"常见"},{"parent":"2d833717c2db","children":[{"parent":"259d8b591849","children":[{"parent":"8af8eb9d1749","children":[],"id":"faac28b5b4bf","title":"只支持insert、select操作,适合日志和数据采集

"}],"id":"8af8eb9d1749","title":"Archive

"},{"parent":"259d8b591849","children":[{"parent":"246578695747","children":[],"id":"6b252c309014","title":"会丢弃所有插入数据,不做保存,记录Blackhole日志,可以用于复制数据库到备份库

"}],"id":"246578695747","title":"Blackhole

"},{"parent":"259d8b591849","children":[{"parent":"22c9f95864a3","children":[],"id":"c91fe24e6951","title":"可以将CSV文件作为MySQL表处理,不支持索引

"}],"id":"22c9f95864a3","title":"CSV

"},{"parent":"259d8b591849","children":[{"parent":"4c62ccaebd05","children":[],"id":"1707d3b04d53","title":"访问MySQL服务器的一个代理,创建远程到MySQL服务器的客户端连接,默认禁用

"}],"id":"4c62ccaebd05","title":"Federated

"},{"parent":"259d8b591849","children":[{"parent":"7c87e4ff16d1","children":[],"id":"66796cc84a6b","title":"数据保存在内存中,不需要磁盘I/O,重启后数据会丢失但是表结构会保留

"}],"id":"7c87e4ff16d1","title":"Memory

"},{"parent":"259d8b591849","children":[{"parent":"7e8ec900b7d9","children":[],"id":"260f221a82a2","title":"MyISAM变种,可以用于日志或数据仓库,已被放弃

"}],"id":"7e8ec900b7d9","title":"Merge

"},{"parent":"259d8b591849","children":[{"parent":"deaf720f74d6","children":[],"id":"f24022cd65fd","title":"集群引擎

"}],"id":"deaf720f74d6","title":"NDB

"}],"id":"259d8b591849","title":"其他(可做了解)"}],"collapsed":false,"id":"2d833717c2db","title":"存储引擎"},{"parent":"93b7f849fef3","children":[{"parent":"a5966d5788f2","children":[{"parent":"7adc8a1c18d2","children":[],"id":"a4370f67b89b","title":"binlog记录了数据库表结构和表数据变更,比如update/delete/insert/truncate/create

"},{"parent":"7adc8a1c18d2","children":[],"id":"4aade95fc08c","title":"主要用来复制和恢复数据"}],"id":"7adc8a1c18d2","title":"binlog

"},{"parent":"a5966d5788f2","children":[{"parent":"7577f673f85c","children":[],"id":"31aeaae43467","title":"在写入内存后会产生redo log,记录本次在某个页上做了什么修改

"},{"parent":"7577f673f85c","children":[],"id":"142d34087f0c","title":"恢复写入内存但数据还没真正写到磁盘的数据,redo log记载的是物理变化,文件的体积很小,恢复速度很快

"}],"id":"7577f673f85c","title":"redo log

"},{"parent":"a5966d5788f2","children":[{"parent":"4814832d3190","children":[],"id":"c90806ae3f83","title":"undo log是逻辑日志,存储着修改之前的数据,相当于一个前版本

"},{"parent":"4814832d3190","children":[],"id":"99805bbdffab","title":"用来回滚和多版本控制"}],"id":"4814832d3190","title":"undo log

"},{"parent":"a5966d5788f2","children":[{"parent":"5dfacdc162c1","children":[],"id":"8d3250892fd5","title":"redo log 记录的是数据的物理变化,binlog 记录的是数据的逻辑变化"},{"parent":"5dfacdc162c1","children":[],"id":"42cefe6fd536","title":"redo log的作用是为持久化而生的,仅存储写入内存但还未刷到磁盘的数据;binlog的作用是复制和恢复而生的,保持主从数据库的一致性,如果整个数据库的数据都被删除了,可以通过binlog恢复,而redo log则不能

"},{"parent":"5dfacdc162c1","children":[],"id":"5590b5b15841","title":"redo log是MySQL的InnoDB引擎所产生的;binlog无论MySQL用什么引擎,都会有的"},{"parent":"5dfacdc162c1","children":[{"parent":"fa7e2894f9c9","children":[{"parent":"b2f9cb6a36f3","children":[{"parent":"be9575df9c26","children":[],"id":"8b3e3c83afb7","title":"如果写redo log失败了,那我们就认为这次事务有问题,回滚,不再写binlog"},{"parent":"be9575df9c26","children":[],"id":"a1220b7a6966","title":"如果写redo log成功了,写binlog,写binlog写一半了,但失败了怎么办?我们还是会对这次的事务回滚,将无效的binlog给删除(因为binlog会影响从库的数据,所以需要做删除操作)"},{"parent":"be9575df9c26","children":[],"id":"122c9e026c22","title":"如果写redo log和binlog都成功了,那这次算是事务才会真正成功"}],"id":"be9575df9c26","title":"解析"},{"parent":"b2f9cb6a36f3","children":[{"parent":"be4d6b8613d4","children":[{"parent":"b17221822991","children":[],"id":"9383dc884572","title":"如果redo log写失败了,而binlog写成功了。那假设内存的数据还没来得及落磁盘,机器就挂掉了。那主从服务器的数据就不一致了。(从服务器通过binlog得到最新的数据,而主服务器由于redo log没有记载,没法恢复数据)"},{"parent":"b17221822991","children":[],"id":"4f824c63c2b0","title":"如果redo log写成功了,而binlog写失败了。那从服务器就拿不到最新的数据了"}],"id":"b17221822991","title":"MySQL需要保证redo log和binlog的数据是一致的"}],"id":"be4d6b8613d4","title":"结论"},{"parent":"b2f9cb6a36f3","children":[{"parent":"4c6de79ecd53","children":[{"parent":"9f54d23f7748","children":[{"parent":"4387d2b41401","children":[],"id":"a4c2899d7ffa","title":"阶段1:InnoDBredo log 写盘,InnoDB 事务进入 prepare(做好准备) 状态"},{"parent":"4387d2b41401","children":[],"id":"05dea48990c1","title":"阶段2:binlog 写盘,InooDB 事务进入 commit(提交) 状态"},{"parent":"4387d2b41401","children":[],"id":"7e939c251666","title":"每个事务binlog的末尾,会记录一个 XID event,标志着事务是否提交成功,也就是说,恢复过程中,binlog 最后一个 XID event 之后的内容都应该被 purge(清除)"}],"id":"4387d2b41401","title":"过程"}],"id":"9f54d23f7748","title":"MySQL通过两阶段提交来保证redo log和binlog的数据是一致的"}],"id":"4c6de79ecd53","title":"保持一致性的方法"}],"id":"b2f9cb6a36f3","title":"引申问题:在写入某一个log,失败了,那会怎么办?比如先写redo log,再写binlog"}],"id":"fa7e2894f9c9","title":"redo log事务开始的时候,就开始记录每次的变更信息,而binlog是在事务提交的时候才记录"}],"id":"5dfacdc162c1","title":"binlog与redo log的区别"},{"parent":"a5966d5788f2","children":[{"parent":"cea3499ae4ce","children":[{"parent":"e50c2afacca9","children":[],"id":"18fce611a383","title":"默认不开启,需要手动将参数设置为ON"}],"id":"e50c2afacca9","title":"慢查询日志,记录所有执行时间超过long_query_time的所有查询或不使用索引的查询

"}],"id":"cea3499ae4ce","title":"slow log

"}],"collapsed":false,"id":"a5966d5788f2","title":"log"},{"parent":"93b7f849fef3","children":[{"parent":"5210cc3e2201","children":[{"parent":"9901dd2630fa","children":[{"parent":"f2714fc25cf8","children":[{"parent":"72e55f7388bc","children":[],"id":"efa64c497a6e","title":"B树在提高了IO性能的同时并没有解决元素遍历的效率低下的问题,为了解决这个问题产生了B+树。B+树只需要去遍历叶子节点就可以实现整棵树的遍历。而在数据库中基于范围的查询是非常频繁的,但B树不支持这样的操作或者说效率太低"}],"id":"72e55f7388bc","title":"为什么选用B+Tree不选择B-Tree?"},{"parent":"f2714fc25cf8","children":[{"parent":"118574a529fa","children":[{"parent":"e98c2b0bce63","children":[],"id":"70ba3b2ec259","title":"和索引中的所有列进行匹配"}],"id":"e98c2b0bce63","title":"全值匹配"},{"parent":"118574a529fa","children":[{"parent":"f6fe4bbe8879","children":[],"id":"6650dea74984","title":"只使用索引的第一列"}],"id":"f6fe4bbe8879","title":"匹配最左前缀"},{"parent":"118574a529fa","children":[{"parent":"a8e32170dad9","children":[],"id":"0736d8171b6b","title":"只匹配某一列值的开头部分"}],"id":"a8e32170dad9","title":"匹配列前缀"},{"parent":"118574a529fa","children":[{"parent":"c3ecae94375c","children":[],"id":"d6ce88217808","title":"查找范围区间"}],"id":"c3ecae94375c","title":"匹配范围值"},{"parent":"118574a529fa","children":[{"parent":"f7a85f0b29a2","children":[],"id":"f2c263321a5b","title":"第一列全匹配,第二列匹配范围区间"}],"id":"f7a85f0b29a2","title":"精确匹配某一列并范围匹配另外一列"},{"parent":"118574a529fa","children":[{"parent":"00e02f358513","children":[],"id":"7eaacfcc4407","title":"覆盖索引"}],"id":"00e02f358513","title":"只访问索引的查询"}],"id":"118574a529fa","title":"适用范围"}],"id":"f2714fc25cf8","title":"B+Tree 索引"},{"parent":"9901dd2630fa","children":[{"parent":"079407222137","children":[{"parent":"2961e3444d75","children":[],"id":"9d63f5055547","title":"只有精确匹配索引所有列的查询才有效"}],"id":"2961e3444d75","title":"等值查询"}],"id":"079407222137","title":"Hash 索引"},{"parent":"9901dd2630fa","children":[{"parent":"ac2bb051dc41","children":[],"id":"b1fc3ee864fd","title":"MyISAM表支持,可以用作地理数据存储"}],"id":"ac2bb051dc41","title":"R- Tree 索引(空间数据索引)"},{"parent":"9901dd2630fa","children":[{"parent":"98bc7018f2e8","children":[],"id":"74d8f80862a6","title":"MyISAM表支持,查找文本中的关键字"}],"id":"98bc7018f2e8","title":"全文索引"}],"id":"9901dd2630fa","title":"常见索引"},{"parent":"5210cc3e2201","children":[{"parent":"eb2a05c840d5","children":[],"id":"38870688a881","title":"InnoDB通过主键聚集数据,若没有主键则会选择一个唯一非空索引代替,若都不存在则会隐式定义一个主键来作为聚簇索引"}],"id":"eb2a05c840d5","title":"聚簇索引"},{"parent":"5210cc3e2201","children":[{"parent":"c1563189924d","children":[],"id":"13d6a347d2e2","title":"会多进行一次扫描(回表操作)"}],"id":"c1563189924d","title":"非聚簇索引"},{"parent":"5210cc3e2201","children":[{"parent":"1c121f6da170","children":[{"parent":"e0e8ecd756d7","children":[],"id":"f3837dae5ad5","title":"索引不能是表达式的一部分,也不能是函数的参数

"}],"id":"e0e8ecd756d7","title":"独立的列"},{"parent":"1c121f6da170","children":[{"parent":"a3bd55e14de1","children":[],"id":"043d3ebd14c6","title":"在需要使用多列作为查询条件时,联合索引比使用多个单列索引性能更好

"}],"id":"a3bd55e14de1","title":"多列索引"},{"parent":"1c121f6da170","children":[{"parent":"d28f309facf7","children":[],"id":"cf867ae6885b","title":"将选择行最强的索引列放在最前面

"},{"parent":"d28f309facf7","children":[],"id":"977d01e8182f","title":"索引的选择性:不重复的索引值和记录总数的对比

"}],"id":"d28f309facf7","title":"索引列的顺序"},{"parent":"1c121f6da170","children":[{"parent":"6ed32658245b","children":[],"id":"a735cff03984","title":"对BLOG、TEXT、VARCHAR类型的列,使用前缀索引,索引开始的部分字符

"},{"parent":"6ed32658245b","children":[],"id":"9af199030361","title":"前缀索引的长度选取,需根据索引的选择性来确定

"}],"id":"6ed32658245b","title":"前缀索引"},{"parent":"1c121f6da170","children":[{"parent":"861978fd7ef6","children":[],"id":"94eacfaadf23","title":"索引包含所有需要查询的字段值"},{"parent":"861978fd7ef6","children":[{"parent":"5d4e9d8774fd","children":[],"id":"5d9d32175efc","title":"索引远小于数据行的大小,只读取索引能够减少数据访问量

"},{"parent":"5d4e9d8774fd","children":[],"id":"858036b36f18","title":"不用回表"}],"id":"5d4e9d8774fd","title":"优点"}],"id":"861978fd7ef6","title":"覆盖索引"}],"id":"1c121f6da170","title":"索引优化"},{"parent":"5210cc3e2201","children":[{"parent":"a88af8d55657","children":[],"id":"d05b0626264c","title":"大大减少了服务器需要扫描的数据行数"},{"parent":"a88af8d55657","children":[],"id":"f685636c3846","title":"帮助服务器避免进行排序和分组,以及避免创建临时表"},{"parent":"a88af8d55657","children":[],"id":"3df58d5d4a3b","title":"将随机 I/O 变为顺序 I/O"}],"id":"a88af8d55657","title":"索引的优点"},{"parent":"5210cc3e2201","children":[{"parent":"1792cb752801","children":[{"parent":"cef88efe00e5","children":[],"id":"c1e59b66fefb","title":"因为如果不是覆盖索引需要回表"}],"id":"cef88efe00e5","title":"对于非常小的表、大部分情况下简单的全表扫描比建立索引更高效"},{"parent":"1792cb752801","children":[],"id":"64956008bace","title":"对于中到大型的表,索引非常有效"},{"parent":"1792cb752801","children":[],"id":"4070060fdb7d","title":"对于特大型的表,建立和维护索引的代价将会随之增长。这种情况下,需要用到一种技术可以直接区分出需要查询的一组数据,而不是一条记录一条记录地匹配,例如可以使用分区技术"}],"id":"1792cb752801","title":"使用条件"}],"collapsed":false,"id":"5210cc3e2201","title":"索引"},{"parent":"93b7f849fef3","children":[{"parent":"1a7dcec2ca47","children":[{"parent":"85c67aaf34a6","children":[{"parent":"35c936f7bdfa","children":[],"id":"a596da0b2488","title":"SIMPLE 简单查询"},{"parent":"35c936f7bdfa","children":[],"id":"f4b9fe60c553","title":"UNION 联合查询"},{"parent":"35c936f7bdfa","children":[],"id":"5f4c581eaea4","title":"SUBQUERY 子查询"}],"id":"35c936f7bdfa","title":"select_type"},{"parent":"85c67aaf34a6","children":[{"parent":"46d559313322","children":[],"id":"c4ee393f6a07","title":"查询的表"}],"id":"46d559313322","title":"table"},{"parent":"85c67aaf34a6","children":[{"parent":"a7b85d2883a5","children":[],"id":"b616d85f45b2","title":"system"},{"parent":"a7b85d2883a5","children":[{"parent":"35007f4ba57f","children":[],"id":"f1988a49fc81","title":"只有一条查询结果&主键/唯一索引"}],"id":"35007f4ba57f","title":"const"},{"parent":"a7b85d2883a5","children":[{"parent":"ad8c20fd30de","children":[],"id":"96881f188e51","title":"链接查询&主键/唯一索引&只有一条查询结果"}],"id":"ad8c20fd30de","title":"eq_ref"},{"parent":"a7b85d2883a5","children":[{"parent":"f42e8c179697","children":[],"id":"f02141ac36a6","title":"非唯一索引"}],"id":"f42e8c179697","title":"ref"},{"parent":"a7b85d2883a5","children":[{"parent":"43897738682a","children":[],"id":"910cd850cb27","title":"使用索引进行范围查询时"}],"id":"43897738682a","title":"range"},{"parent":"a7b85d2883a5","children":[{"parent":"8fef129a816a","children":[],"id":"200bdd342f1e","title":"查询的字段时索引的一部分,覆盖索引"},{"parent":"8fef129a816a","children":[],"id":"462b089e56d4","title":"使用主键排序"}],"id":"8fef129a816a","title":"index"},{"parent":"a7b85d2883a5","children":[{"parent":"1bb5aaa0dd0d","children":[],"id":"764244bd2a17","title":"全表扫描"}],"id":"1bb5aaa0dd0d","title":"all"}],"id":"a7b85d2883a5","title":"type"},{"parent":"85c67aaf34a6","children":[{"parent":"b31b3a54b63b","children":[],"id":"dde0162686c2","title":"可选择的索引"}],"id":"b31b3a54b63b","title":"possible_keys"},{"parent":"85c67aaf34a6","children":[{"parent":"81ad0f7b17de","children":[],"id":"905080a6f852","title":"实际使用的索引"}],"id":"81ad0f7b17de","title":"key"},{"parent":"85c67aaf34a6","children":[{"parent":"e313359e3213","children":[],"id":"9094ee686fb6","title":"扫描的行数"}],"id":"e313359e3213","title":"rows"}],"id":"85c67aaf34a6","title":"使用 Explain 分析 Select 查询语句

"},{"parent":"1a7dcec2ca47","children":[{"parent":"766730b3f63f","children":[{"parent":"e4c6eb7dee1d","children":[],"id":"f00841c92b2d","title":"只查询必要的列,对使用*永远持怀疑态度"},{"parent":"e4c6eb7dee1d","children":[],"id":"6396af513501","title":"只返回必要的行,使用Limit限制返回行数"},{"parent":"e4c6eb7dee1d","children":[],"id":"e69aa7cfb00a","title":"缓存重复查询的数据,例如使用redis"}],"id":"e4c6eb7dee1d","title":"减少请求的数据量"},{"parent":"766730b3f63f","children":[{"parent":"64889a19144a","children":[],"id":"e3d6b04e7263","title":"使用索引覆盖查询"}],"id":"64889a19144a","title":"减少数据库扫描的行数"}],"id":"766730b3f63f","title":"优化数据访问"},{"parent":"1a7dcec2ca47","children":[{"parent":"879ad88fe515","children":[],"id":"4fcee3d0c287","title":"有时候一个复杂的查询并没有多个简单查询执行迅速"},{"parent":"879ad88fe515","children":[],"id":"344a37829ef0","title":"对于大查询,可以使用切分查询,每次只返回一小部分"},{"parent":"879ad88fe515","children":[],"id":"fd9207da3a3a","title":"对于关联查询,可根据情况进行分解,在应用程序中进行关联"},{"parent":"879ad88fe515","children":[],"id":"27dc6dbe2891","title":"对于大分页等场景, 可采用延迟关联

"}],"id":"879ad88fe515","title":"重构查询方式"}],"collapsed":false,"id":"1a7dcec2ca47","title":"查询性能优化"},{"parent":"93b7f849fef3","children":[{"parent":"f349bf5b5c84","children":[{"parent":"8a0fefaa1a67","children":[],"id":"9efbed658957","title":"原子性